Security and Privacy of Machine Learning

University of Virginia cs6501 Seminar Course, Spring 2018

Coordinator: David Evans

Thanks for a great semester!

Here are the topics we covered:

Class 1: Intro to Adversarial Machine Learning

Class 2: Privacy in Machine Learning

Class 3: Adversarial Machine Learning

Class 4: Differential Privacy In Action

Class 5: Adversarial Machine Learning in Non-Image Domains

Class 6: Measuring Robustness of ML Models

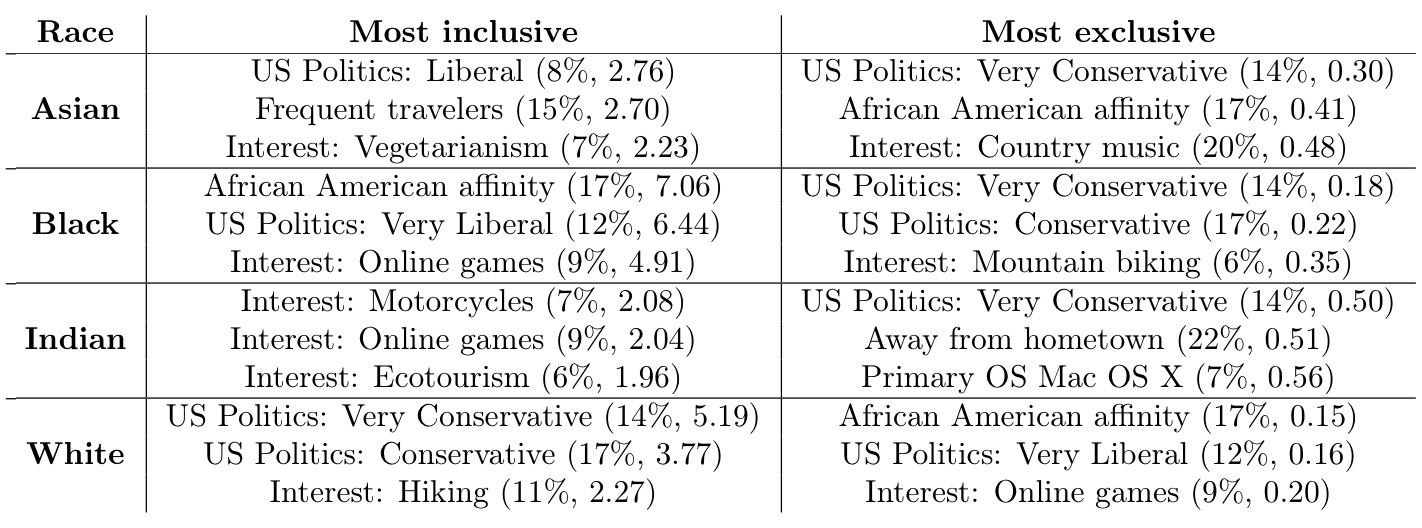

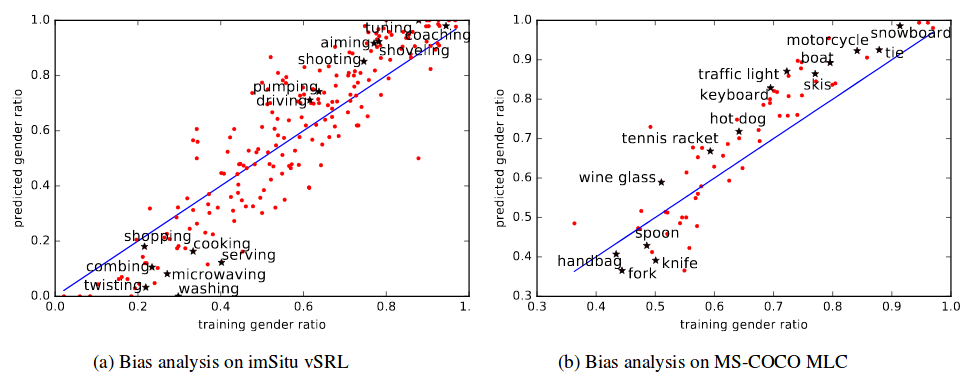

Class 7: Biases in ML, Discriminatory Advertising

Class 8: Testing of Deep Networks

Class 9: Adversarial Malware Detection

Class 10: Formal Verification Methods

Class 11: Poisoning

This week we discussed poisoning attacks, which differ from previously-discussed attacks in a key way. Instead of finding test instances that the target model misclassifies, a poisoning attack adds “poisoned” instances to the training set, introducing new errors into the model. Below are three papers which discuss interesting work happening in this field.

Poison Frogs!

Ali Shafahi, W. Ronny Huang, Mahyar Najibi, Octavian Suciu, Christoph Studer, Tudor Dumitras, and Tom Goldstein. Poison Frogs! Targeted Clean-Label Poisoning Attacks on Neural Networks. April 2018. arXiv e-print [PDF]

A Simple Clean Label Attack

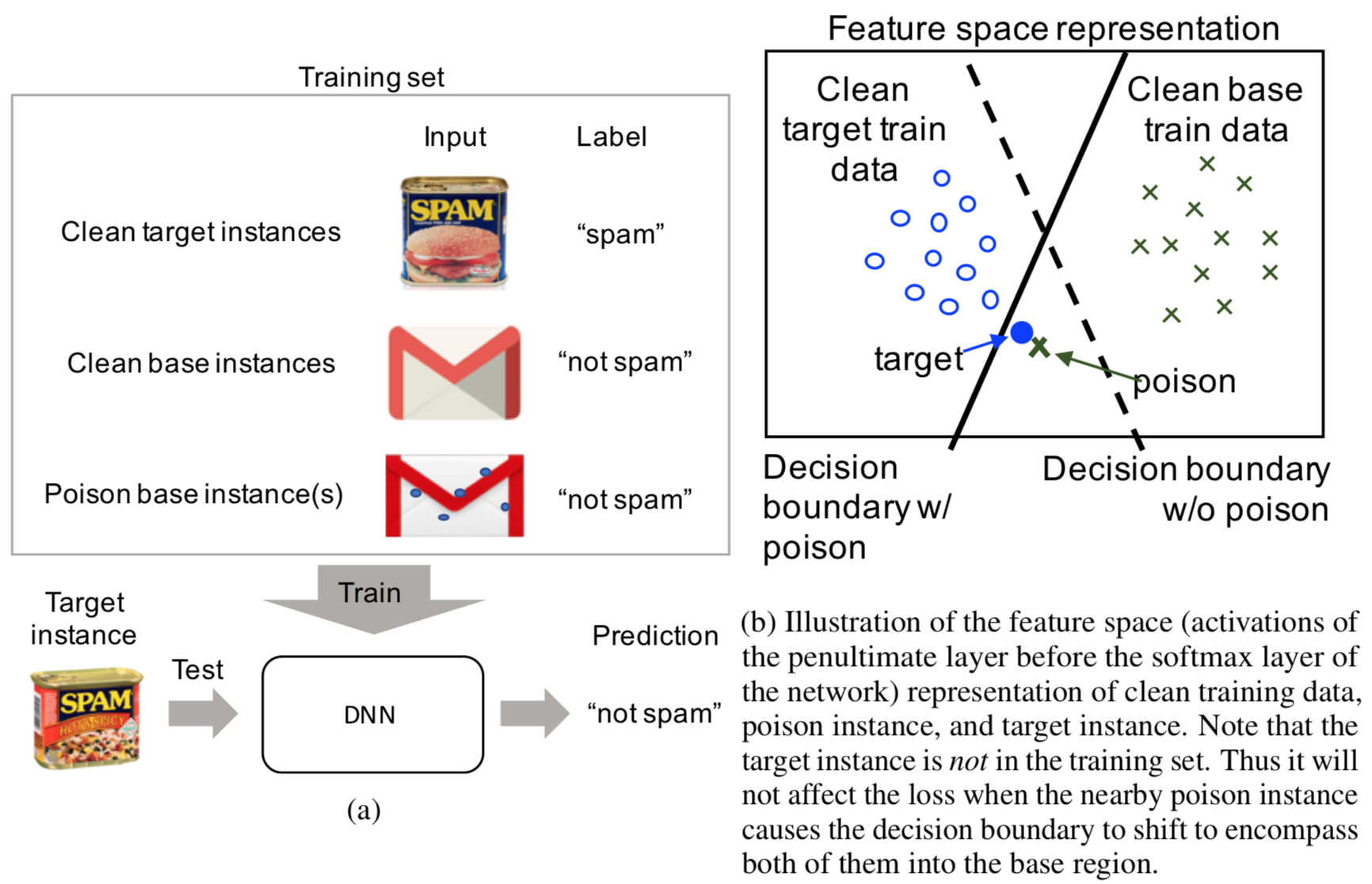

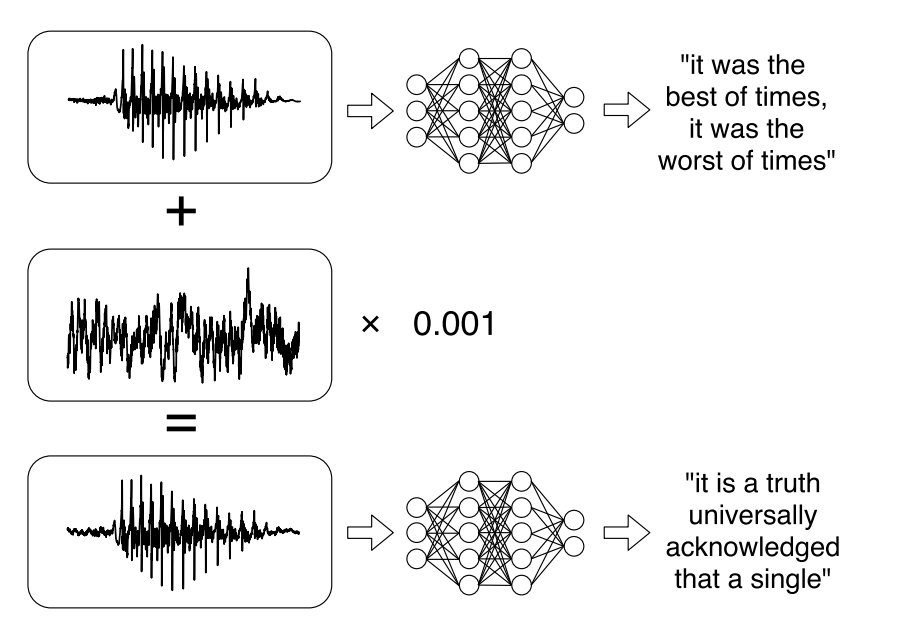

The paper presents a novel clean-label attack, which restricts the attacker to injecting correctly-classified examples into the victim’s training set. The goal of the attacker is to make the model misclassify a “target” instance, specifically to the same class as some chosen “base” instance. The attack is executed by subtly changing the base instance to display features of the target; this is illustrated in Figure (a) below. Figure (b) shows a simple diagram of the intended effect on the model’s decision boundary. When the model trains, it hopefully overfits on the poisoned instance, thereby allowing the target to be classified incorrectly. The major benefit of this approach is that it is difficult for the victim to detect: since the poisoned data is still labeled correctly, the model’s test accuracy should not change.

In more formal language, the clean-label attack does this: given a target instance \(t\) and a base instance \(b\), create a poisoned instance \(p\) such that

- \(p\) is humanly-indistinguishable from \(b\) (and is classified the same), and

- the model, after training on a data set that includes \(p\), misclassifies \(t\) to be in the same class as \(b\).

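The equivalent optimization problem is

$$ p = \underset{x}{\operatorname{argmin}} \; \lVert f(x) - f(t) \rVert_2^2 + \beta \lVert x - b \rVert_2^2 $$

where \(f(x)\) denotes the feature-space representation of input \(x\) (the activations feeding the final classification layer), and \(\beta\) controls how closely \(p\) resembles the base instance \(b\). There is a simple algorithm for solving this optimization problem: alternate between “forward steps” to inch closer to \(t\) in feature space and “backward steps” to stay close to \(b\) in input space.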
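The alternating forward and backward steps can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes a toy linear feature extractor \(f(x) = Wx\) in place of a neural network, and the names `craft_poison`, `W`, `lr`, and `steps` are hypothetical.

```python
import numpy as np

def craft_poison(W, base, target, beta=0.1, lr=0.01, steps=500):
    """Forward-backward splitting for a toy linear feature map f(x) = W @ x."""
    x = base.copy()
    target_feat = W @ target
    for _ in range(steps):
        # Forward step: gradient descent on ||f(x) - f(t)||^2,
        # which moves x toward the target in feature space.
        grad = 2.0 * W.T @ (W @ x - target_feat)
        x_hat = x - lr * grad
        # Backward (proximal) step: pull x back toward the base in input space.
        x = (x_hat + lr * beta * base) / (1.0 + lr * beta)
    return x

rng = np.random.default_rng(0)
W = rng.normal(size=(4, 8))      # assumed feature extractor (illustrative only)
base = rng.normal(size=8)
target = rng.normal(size=8)

poison = craft_poison(W, base, target)
feat_dist_before = np.linalg.norm(W @ base - W @ target)
feat_dist_after = np.linalg.norm(W @ poison - W @ target)
print(feat_dist_before, feat_dist_after)
```

A larger `beta` keeps the poison closer to the base instance at the cost of a larger feature-space distance to the target.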

The clean-label attack works extremely well on transfer learning models, which contain a pre-trained feature extraction network hooked up to a trainable final classification layer. As an example, the authors created an InceptionV3 model that classified images of dogs and fish. They were able to attack this model with a 100% success rate by including just one poisoned image in each attack; furthermore, the target images were misclassified by the model with high confidence.

Watermarking Attack on End-to-End Training

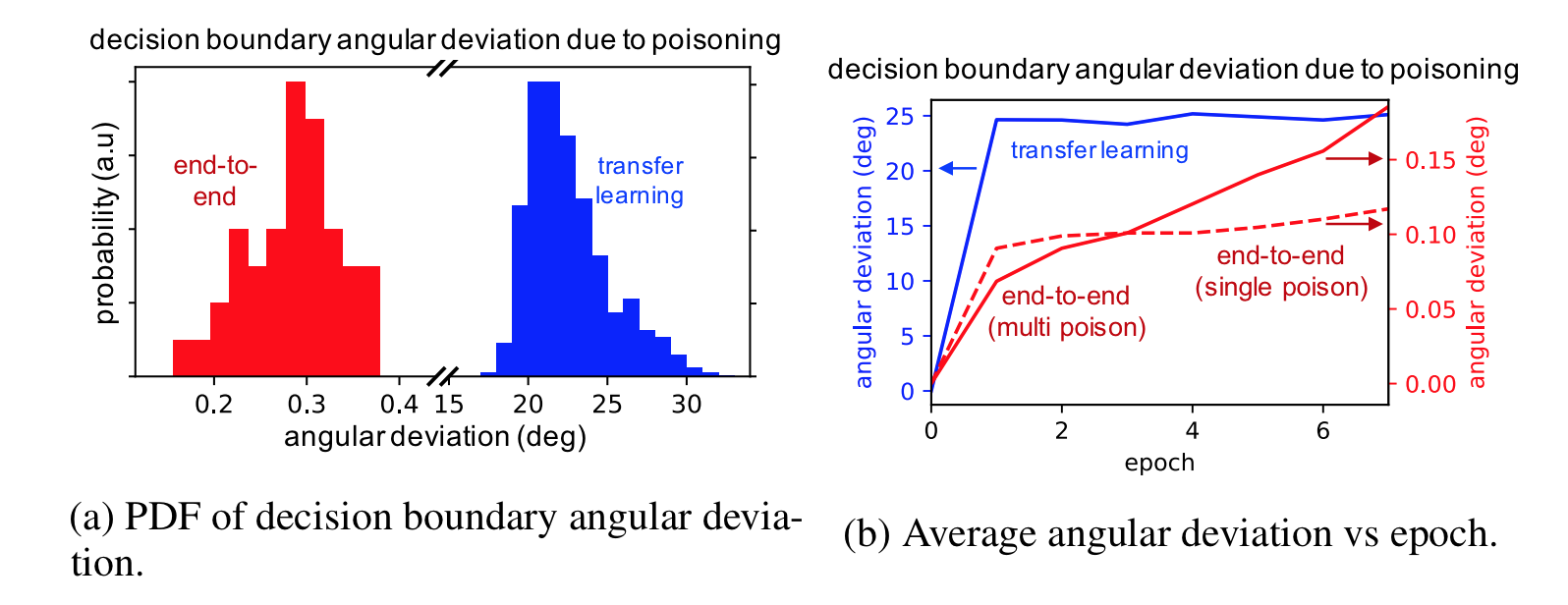

The poisoning attack above is effective when the early feature extraction layers of a network are not retrained (as in transfer learning), but is not as effective when training end-to-end. The figure below shows this effect measured by the angular deviation of the decision boundary. The angular deviation is the angular difference between the decision boundary of a clean and poisoned network. A high angular deviation indicates the poisoning attack was strong, causing the decision boundary to significantly shift. We see that the poisoning attack we’ve discussed does not shift the decision boundary on end-to-end learning nearly as much as it does for transfer learning.

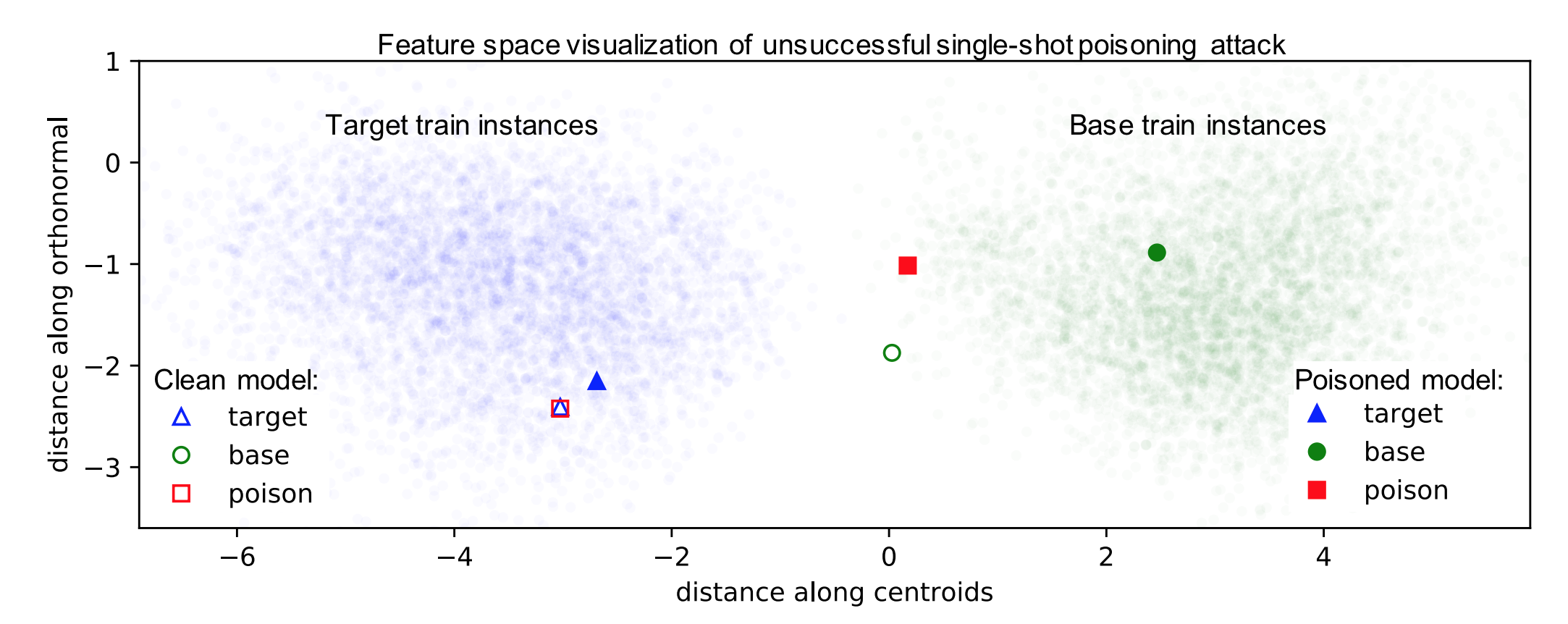

The figure below shows the feature space representations of the target, base, and poison instances in the context of their training data for a single-shot poisoning attack. The clean model shows the target and poison instance overlapping – this indicates the optimization algorithm to find a poison instance works. However, we see that retraining the network separates the poison and target instances and returns the poison instance to the base class.

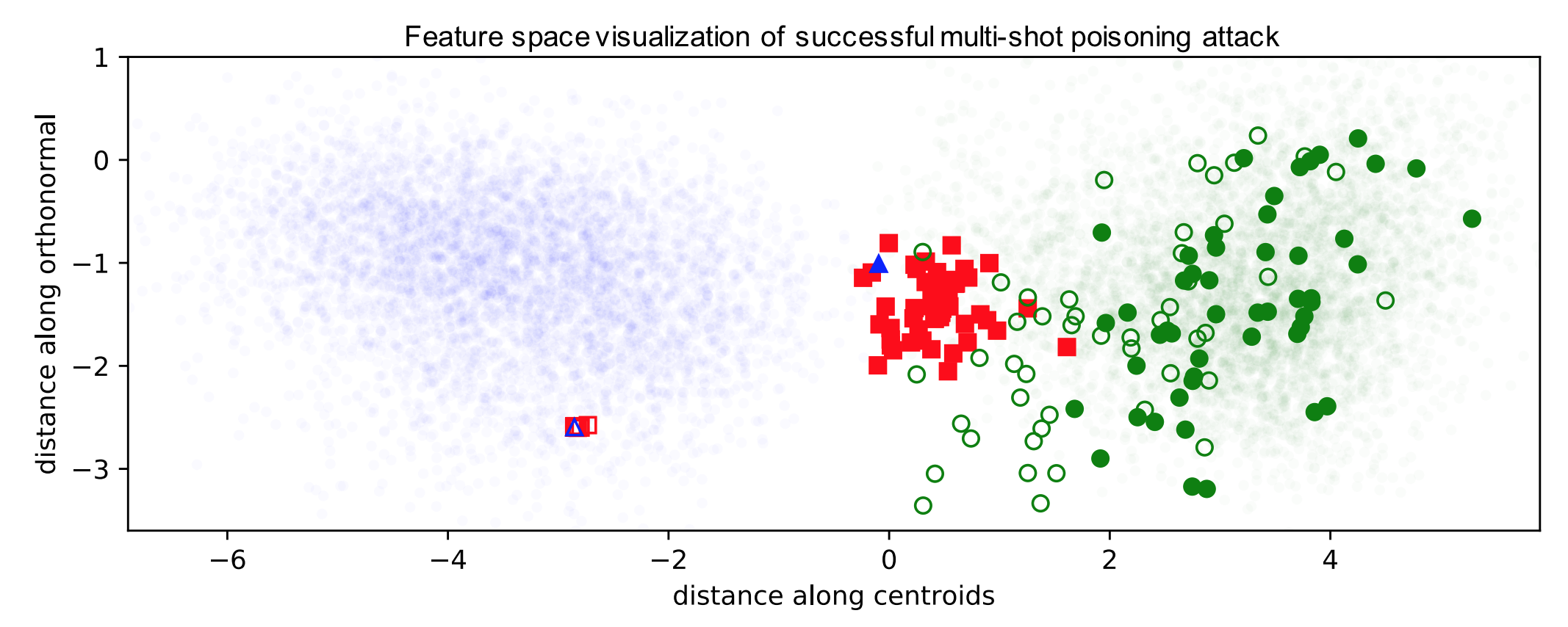

To fix this, the authors use a technique called watermarking. Watermarking blends additional features of the target instance into the poison instance in a way humans can’t notice. In particular, a low-opacity watermark of the target instance is added to the poison instances. Watermarks are not noticeable to humans up to 30% opacity. The figure below shows how constructing 50 poison instances from 50 random base instances causes the target instance to be pulled out of the target feature space into the base class feature space, resulting in incorrect classifications.

We see below 60 images of dogs with watermarks of birds at 30% opacity. These poison instances successfully cause a target image of a bird to get misclassified as a dog with end-to-end training.
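The watermarking step amounts to a pixel-wise blend of the target into the base image. A minimal sketch, assuming 8-bit images of identical shape (the function name `watermark` and the toy images are illustrative, not from the paper):

```python
import numpy as np

def watermark(base_img, target_img, opacity=0.3):
    """Blend the target into the base: opacity * target + (1 - opacity) * base."""
    if base_img.shape != target_img.shape:
        raise ValueError("base and target images must have the same shape")
    blended = (opacity * target_img.astype(np.float64)
               + (1.0 - opacity) * base_img.astype(np.float64))
    # Round before casting so floating-point error cannot truncate a pixel value.
    return np.clip(np.rint(blended), 0, 255).astype(np.uint8)

# Toy 2x2 RGB images with constant intensity.
base_img = np.full((2, 2, 3), 200, dtype=np.uint8)
target_img = np.full((2, 2, 3), 100, dtype=np.uint8)
poison_img = watermark(base_img, target_img, opacity=0.3)
```

In the attack described above, this blend would be applied to each base image before the poison optimization is run.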

The authors found that targeting outlier instances has a 17% higher success rate. Since these targets lie far from other training samples in their class, they are close to the decision boundary, and it is therefore easier to flip their class label. Additionally, the authors found that the attack success rate was higher when more poison instances were included in the retraining and when the watermark had a higher opacity.

Poisoning Regression Learning

Matthew Jagielski, Alina Oprea, Battista Biggio, Chang Liu, Cristina Nita-Rotaru and Bo Li. Manipulating Machine Learning: Poisoning Attacks and Countermeasures for Regression Learning. April 2018. arXiv e-print [PDF] (IEEE Symposium on Security and Privacy 2018)

It is well understood that machine learning models can be manipulated by capable attackers. Both test-time evasion attacks and training-time data poisoning attacks exist, so studying and understanding these risks is important for applying machine learning in security-critical domains.

In this paper, the authors study the specific problem of training-data poisoning attacks on linear regression models and then propose an effective defensive strategy.

A linear regression model is defined as

$$ f(\mathbf{x}, \mathbf{\theta}) = \mathbf{w}^{\top}\mathbf{x} + b, \qquad \mathbf{\theta} = (\mathbf{w}, b) $$

The loss function optimized during training is the mean-squared error (MSE) plus a regularization term:

$$ L(D_{tr}, \mathbf{\theta}) = \frac{1}{n} \sum_{i=1}^{n} \left( f(\mathbf{x}_i, \mathbf{\theta}) - y_i \right)^2 + \lambda \, \Omega(\mathbf{w}) $$

MSE is used here because the output of linear regression is a continuous value, whereas a traditional classification task outputs discrete categorical values. The term \(\Omega(\mathbf{w})\) denotes the regularization term, weighted by \(\lambda\). Different norms correspond to different regularization functions: the \(L_2\)-norm corresponds to ridge regression, the \(L_1\)-norm to LASSO regression, and elastic-net regression takes a linear combination of the \(L_1\)-norm and \(L_2\)-norm.
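To make the regularized loss concrete, here is a small sketch that evaluates MSE plus a penalty for the three regularizers mentioned. The scaling constants (the factor of one half on the squared norm, the mixing weight `rho`) are assumptions and may differ from the paper's conventions.

```python
import numpy as np

def regularized_mse(X, y, w, b, lam=0.1, penalty="ridge", rho=0.5):
    """Mean-squared error plus lam * Omega(w) for a linear model w.x + b."""
    residuals = X @ w + b - y
    mse = np.mean(residuals ** 2)
    l1 = np.sum(np.abs(w))
    l2 = 0.5 * np.sum(w ** 2)
    if penalty == "ridge":
        omega = l2
    elif penalty == "lasso":
        omega = l1
    elif penalty == "elastic":
        omega = rho * l1 + (1.0 - rho) * l2   # linear combination of both norms
    else:
        raise ValueError(f"unknown penalty: {penalty}")
    return mse + lam * omega

X = np.array([[1.0, 0.0], [0.0, 1.0]])
y = np.array([1.0, 2.0])
w = np.array([1.0, 2.0])   # fits the toy data exactly, so only the penalty remains

ridge_loss = regularized_mse(X, y, w, 0.0, penalty="ridge")
lasso_loss = regularized_mse(X, y, w, 0.0, penalty="lasso")
```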

Optimization-based Poisoning Attack

The attack aims to corrupt the model in the training phase by introducing poisoned training data points such that predictions at test time will be influenced. The authors consider both white-box and black-box attacks. In the white-box setting, the attacker knows everything, including the training dataset, feature values, training algorithm, and trained parameters. In the black-box setting, the training dataset is assumed to be unknown. With this setup, we arrive at the following formulation of the training data poisoning attack:

$$ \arg\max_{D_{p}} \; W(D^{'}, \mathbf{\theta}_{p}^{*}) \quad \text{s.t.} \quad \mathbf{\theta}_{p}^{*} \in \arg\min_{\mathbf{\theta}} \; L(D_{tr} \cup D_{p}, \mathbf{\theta}) $$

where \(\mathbf{\theta}_{p}^{*}\) is the model parameter obtained by training the model on the poisoned training dataset. \(D_{tr}\) denotes the unpoisoned training data, and \(D_{p}\) is the poisoned training data, which typically amounts to around 10% of the clean training data. \(W(D^{'},\mathbf{\theta}_{p}^{*})\) is the loss function defined on the test (or validation) dataset \(D^{'}\), which is free from the poisoning attacks.

Note that this is a bilevel optimization problem and is different from the traditional optimization we know, in that the constraint itself is an optimization problem. Also, the parameter \(\theta_{p}^{*}\) depends implicitly on the poisoned training dataset \(D_{p}^{*}\).

To optimize the above problem with respect to a set of data points \(D_{p}\), the approach is to optimize each instance \(\mathbf{x}_{c}\) in the set \(D_{p}\) in turn. The authors apply gradient ascent to find an \(\mathbf{x}_c\) that maximizes the loss function \(W(\cdot)\). By the chain rule, the gradient can be calculated as:

$$ \nabla_{\mathbf{x}_c} W = \nabla_{\mathbf{x}_c} \mathbf{\theta}(\mathbf{x}_c)^{\top} \cdot \nabla_{\mathbf{\theta}} W $$

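The calculation of the first term is non-trivial because \(\mathbf{\theta}\) depends implicitly on \(\mathbf{x}_c\). Requiring the KKT optimality conditions of the inner training problem to remain satisfied as \(\mathbf{x}_c\) changes, the authors arrive at an equation of the following general form:

$$ \nabla_{\mathbf{x}_c} \nabla_{\mathbf{\theta}} L(D_{tr} \cup \{\mathbf{x}_c\}, \mathbf{\theta}) + \nabla_{\mathbf{x}_c} \mathbf{\theta}^{\top} \cdot \nabla_{\mathbf{\theta}}^{2} L(D_{tr} \cup \{\mathbf{x}_c\}, \mathbf{\theta}) = \mathbf{0} $$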

Combining the above two, we are able to approximately compute the gradient and update \(\mathbf{x}_c\). Notably, unlike previous papers on training data poisoning attacks on classifiers, the problem setting of this paper is linear regression, and the authors further propose to optimize both the data point \(\mathbf{x}_c\) and its response variable \(y_c\). Hence, a new variable \(\mathbf{z}_{c}= (\mathbf{x}_{c},y_{c})\) is introduced to replace \(\mathbf{x}_c\). Details of the gradient of \(W\) with respect to the variable \(\mathbf{z}_{c}\) can be found in equation (14) and equation (4) of the paper.

Statistical-based Poisoning Attack

The statistical attack contrasts with the previous optimization-based attack. It is computationally efficient and operates by generating adversarial training points from a multivariate normal distribution with mean and covariance estimated from the training data. The feature values are then rounded to corner values, and finally the response value \(y_{c}\) for a given data point \(\mathbf{x}_c\) is set to a boundary value (either 0 or 1). As the description suggests, this attack is computationally cheap but less effective than the optimization-based method.
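A rough sketch of the statistical attack, assuming features and responses are scaled to \([0, 1]\). The rule used here for picking the response (take whichever boundary value is farther from the current model's prediction) is our assumption for illustration, and the function names are hypothetical.

```python
import numpy as np

def statistical_poison(X, n_poison, rng):
    """Sample poison features from a Gaussian fitted to X, then round to corners."""
    mean = X.mean(axis=0)
    cov = np.cov(X, rowvar=False)
    samples = rng.multivariate_normal(mean, cov, size=n_poison)
    # Clip into the feasible box, then round every coordinate to 0 or 1.
    return np.rint(np.clip(samples, 0.0, 1.0))

def boundary_response(X_p, w, b):
    """Pick y in {0, 1}, taking the value farther from the model's prediction."""
    pred = X_p @ w + b
    return np.where(pred >= 0.5, 0.0, 1.0)

rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(100, 3))      # toy clean training features
w, b = np.array([0.2, 0.3, 0.1]), 0.1         # assumed current model parameters

X_p = statistical_poison(X, n_poison=20, rng=rng)
y_p = boundary_response(X_p, w, b)
```

No gradients or retraining are needed, which is why this attack is so much cheaper than the optimization-based one.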

TRIM algorithm

This is the defense method proposed by the authors to counter the two attack strategies described above. The TRIM algorithm iteratively estimates the regression parameters, training in each iteration on the subset of points with the lowest residuals. Here, the residual of a training point is the difference between its response value \(y_i\) and the model’s prediction \(f(\mathbf{x}_i)\). The intuition behind the algorithm is that the majority of the training points are not corrupted, and poisoned data points typically behave like outliers with large residuals. If poisoned data points do not behave like outliers, their effect on the regression task is small, and they can be considered less harmful. The TRIM algorithm provides a solution to the following optimization problem:

$$ \min_{\mathbf{\theta}, I} \; L(D^{I}, \mathbf{\theta}) \quad \text{s.t.} \quad I \subset \{1, \ldots, N\}, \; |I| = n $$

where \(N\) is the total number of (clean and poisoned) training points and \(D^{I}\) is the subset of the training data indexed by \(I\).

Intuitively, the optimization problem above optimizes the regression parameter \(\theta\) and the subset of points with the smallest residuals at the same time. This problem is computationally challenging, as the simple approach of enumerating all possible subsets \(I\) of size \(n\) is infeasible: the number of such subsets is far too large. Also, the parameter \(\theta\) is unknown in advance (if we knew \(\theta\), we could simply choose \(I\) as the set of \(n\) points with the lowest residuals with respect to \(\theta\)).

The solution to the challenge above is to alternately optimize the parameter \(\theta\) and the set \(I\). Specifically, at the beginning of iteration \(i\), an estimate of \(\theta\) is obtained based on the current set \(I\). Next, with this \(\theta\), the set \(I\) is updated by selecting the \(n\) points with the lowest residuals with respect to \(\theta\). As a side note, in the first iteration the set \(I\) is initialized to a random subset of size \(n\), and \(\theta\) is set through some initialization technique or prior knowledge.
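The alternating procedure can be sketched in a few lines. This is an illustrative reading of the description above, not the authors' code: it assumes closed-form ridge regression as the inner learner, stops when the selected subset no longer changes, and uses hypothetical names (`fit_ridge`, `trim`, `n_clean`).

```python
import numpy as np

def fit_ridge(X, y, lam):
    """Closed-form ridge fit with an unpenalized intercept; returns [w..., b]."""
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    reg = lam * np.eye(Xb.shape[1])
    reg[-1, -1] = 0.0
    return np.linalg.solve(Xb.T @ Xb + reg, Xb.T @ y)

def trim(X, y, n_clean, lam=1e-3, max_iter=100, seed=0):
    """Alternate between fitting theta on subset I and re-selecting I by residual."""
    rng = np.random.default_rng(seed)
    idx = np.sort(rng.choice(len(y), size=n_clean, replace=False))  # random initial I
    Xb = np.hstack([X, np.ones((len(y), 1))])
    for _ in range(max_iter):
        theta = fit_ridge(X[idx], y[idx], lam)
        residuals = (Xb @ theta - y) ** 2
        new_idx = np.sort(np.argsort(residuals)[:n_clean])  # n lowest residuals
        if np.array_equal(new_idx, idx):
            break                                           # subset is stable
        idx = new_idx
    return theta, idx

# Toy data: 40 clean points on y = 2x + 1, plus 10 poisoned points at y = -5.
rng = np.random.default_rng(0)
x_clean = rng.uniform(0.0, 1.0, size=40)
y_clean = 2.0 * x_clean + 1.0 + rng.normal(0.0, 0.01, size=40)
x_poison = rng.uniform(0.0, 1.0, size=10)
X = np.concatenate([x_clean, x_poison]).reshape(-1, 1)
y = np.concatenate([y_clean, np.full(10, -5.0)])

theta, kept = trim(X, y, n_clean=40)
print(theta, kept)
```

In this toy setup the poisoned points are obvious outliers, so the subset settles on the clean points; an attacker who keeps residuals small would be harder to trim but, as noted above, also less harmful.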

Theorem 1 in the paper proves that TRIM terminates in a finite number of steps, so convergence of the algorithm is guaranteed. The following theorem (Theorem 2 in the paper) provides an upper bound on the worst-case loss of the regression model under adversarial noise injection.

The left-hand side is the loss on clean data (test or validation dataset) under parameters trained on noisy data; it is upper bounded by a factor of \(1 + \frac{\alpha}{1-\alpha}\) times the best-case training loss on the clean data \(D_{tr}\), where the parameter \(\theta^{*}\) is obtained by training on the clean dataset. Hence, the right-hand side denotes an ideal-case scenario. Note that an implicit assumption here is that \(D^{''}\) and \(D_{tr}\) follow the same distribution. Also, note that \(\alpha\) is the fraction of poisoned data points in the training set, which is typically very small.

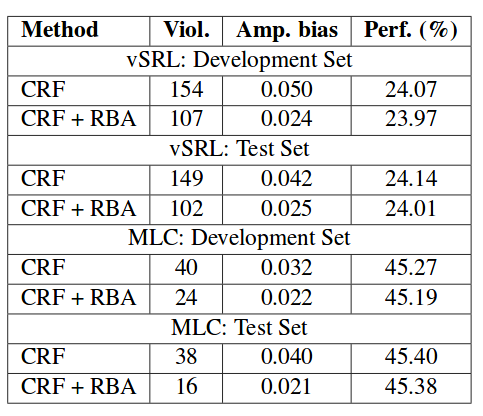

Experimental Results

In this paper, the authors used a health care dataset, a loan dataset, and a house pricing dataset, all of which are well suited to regression tasks. This blog post only includes results for ridge regression. As shown in the figure below (Fig. 3 in the paper), the optimization-based attack and the statistical attack proposed in this paper significantly outperform the baseline gradient descent (BGD) method. (BGD is adapted from a previous attack on classification tasks, since there were no existing methods for attacking regression models.)

As for the defense method, the following figure illustrates its effectiveness against the attacks described in the previous sections. The TRIM algorithm (the defense method proposed in this paper) significantly outperforms the other defense methods. The three other defense methods come from existing work suited to countering the attacks proposed in this paper (RANSAC and Huber from robust statistics, and RONI, implemented by the authors based on this and this).

Conclusion

In conclusion, this paper is the first to consider training data poisoning attacks on linear regression models. It provides both attack and defense methods for the linear regression task, with theoretical guarantees on the convergence and optimality of the attack and defense algorithms.

ANTIDOTE

Benjamin I. P. Rubinstein, Blaine Nelson, Ling Huang, Anthony D. Joseph, Shing-hon Lau, Satish Rao, Nina Taft, and J. D. Tygar. “Antidote: understanding and defending against poisoning of anomaly detectors.” Proceedings of the 9th ACM SIGCOMM Conference on Internet Measurement, 2009. [PDF]

Background of Traffic Matrix and PCA-based Defense

To uncover anomalies, many network anomography detection techniques mine the network-wide traffic matrix, which describes the traffic volume between all pairs of Points-of-Presence (PoP) in a backbone network and contains the collected traffic volume time series for each origin-destination (OD) flow.

Consider a network with \(N\) links and \(F\) OD flows, with traffic measured over \(T\) time intervals. The relationship between link traffic and OD flow traffic is concisely captured in the routing matrix \(A\). This is an \(N \times F\) matrix such that \(A_{ij} = 1\) if OD flow \(j\) passes over link \(i\), and 0 otherwise. If \(X\) is the \(T \times F\) traffic matrix (TM) containing the time-series of all OD flows, and \(Y\) is the \(T \times N\) link TM containing the time-series of all links, then \(Y = XA^{\top}\). We denote the \(t\)th row of \(Y\) as \(y(t) = Y_{t,\bullet}\), which is the vector of \(N\) link traffic measurements at time \(t\), and the original traffic along a source link \(S\) by \(y_S(t)\).
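A tiny numerical example may help with the shapes. The network below (3 links, 2 OD flows, 4 time steps) is made up for illustration; with \(A\) stored as \(N \times F\), the product is written with a transpose so that the dimensions agree.

```python
import numpy as np

# Routing matrix A (N x F): A[i, j] = 1 if OD flow j passes over link i.
A = np.array([[1, 0],    # link 0 carries flow 0
              [1, 1],    # link 1 carries both flows
              [0, 1]])   # link 2 carries flow 1

# OD flow traffic matrix X (T x F): one row per time interval.
X = np.array([[10, 5],
              [12, 7],
              [11, 6],
              [ 9, 4]])

# Link traffic matrix Y (T x N): each link sums the flows routed over it.
Y = X @ A.T
print(Y)
```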

The PCA-based detection method infers a normal traffic subspace using PCA, which finds the principal traffic components; this makes it easier to identify volume anomalies in the remaining abnormal subspace.

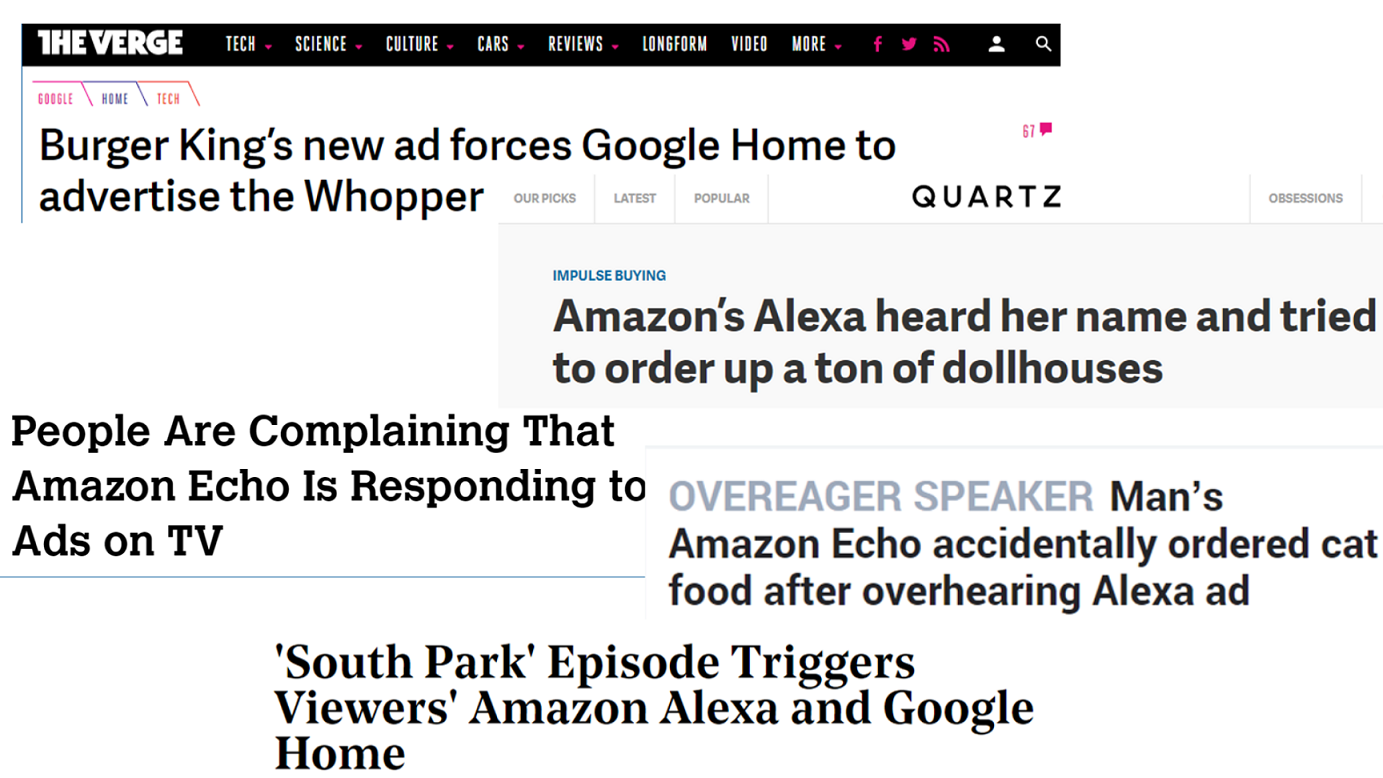

This paper studies how to poison Principal Component Analysis (PCA) anomaly detectors by contaminating the training data the detector uses (observed normal network activity), for example by adding extra traffic and noise to regular network traffic, in order to achieve a higher false negative rate.

Poisoning Strategies

Threat Model

The adversary’s goal is to launch a Denial of Service (DoS) attack on some victim and to have the attack traffic successfully cross an ISP’s network without being detected. Before launching a DoS attack, the attacker poisons the detector for a period of time by injecting additional traffic, called chaff, along the OD flow that he eventually intends to attack. For a poisoning strategy, the attacker needs to decide how much chaff to add, and when to do so. These choices are guided by the amount of information available to the attacker. The weakest attacker knows nothing about the traffic at the ingress PoP and adds chaff randomly. An intermediate case is the partially informed attacker, who knows the current volume of traffic on the ingress link(s) that he intends to inject chaff on; this is a locally-informed attack. An attack can also be globally-informed, when the attacker’s global view over the network enables him to know the traffic levels on all network links. Moreover, we assume the globally-informed attacker has knowledge of future link traffic levels.

Uninformed Chaff Selection

At each time \(t\), the adversary decides whether or not to inject chaff according to a Bernoulli random variable. If he decides to inject chaff, the amount of chaff added is of size \(\theta\), i.e., \(c_t = \theta\).

Locally-Informed Chaff Selection

The attacker knows the volume of traffic on the ingress link he controls, \(y_S(t)\). Hence, this scheme elects to add chaff only when the existing traffic is already reasonably large. In particular, chaff is added when the traffic volume on the link exceeds a parameter \(\alpha\) (typically the mean). The amount of chaff added is \(c_t = (\max\{0, y_S(t) - \alpha\})^{\theta}\).
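The two simpler chaff schedules are easy to express in code. The sketch below is illustrative only: the function names and the Bernoulli probability `p` are hypothetical, and the locally-informed rule reads \(\theta\) as an exponent on the excess traffic, which is our interpretation of the formula above.

```python
import numpy as np

def uninformed_chaff(T, theta, p, rng):
    """At each time step, inject a fixed amount theta with probability p."""
    inject = rng.random(T) < p
    return np.where(inject, theta, 0.0)

def locally_informed_chaff(y_s, alpha, theta):
    """Inject chaff only when link traffic exceeds alpha; more excess, more chaff."""
    return np.maximum(0.0, y_s - alpha) ** theta

rng = np.random.default_rng(0)
c_uninformed = uninformed_chaff(T=1000, theta=5.0, p=0.3, rng=rng)

y_s = np.array([1.0, 3.0, 5.0])                  # toy traffic on the ingress link
c_local = locally_informed_chaff(y_s, alpha=y_s.mean(), theta=2.0)
```

The locally-informed schedule concentrates chaff in the time steps where traffic is already high, which is where it inflates the variance along the target flow the most.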

Globally-Informed

The attacker is an omnipotent adversary with knowledge of past, present, and future network traffic. The attacker selects a link to poison and amount of chaff to add by solving an optimization problem. The optimization problem is as follows:

In this optimization problem, as introduced before, \(Y\) contains the time series of all links; \(A\) is the routing matrix; \(C\) is the amount of chaff; \(\widetilde{y_t}\) is the link volume at future time \(t\); \(\mu\) is the mean traffic vector; and \(\theta\) is a constant constraining the total chaff.

ANTIDOTE: A ROBUST DEFENSE

This paper proposes an approach that makes PCA robust by searching for directions that maximize a robust scale estimate of the data projection. Together with a new robust Laplace threshold, this forms a new network-wide traffic anomaly detection method, ANTIDOTE. To mitigate the effect of poisoning attacks, the detector needs a learning algorithm that is stable in spite of data contamination. Robust PCA is such an algorithm, since “robust” is the formal term used to qualify this notion of stability.

The aim of a robust PCA is to construct a low-dimensional subspace that captures most of the data’s dispersion and is stable under data contamination. The robust PCA algorithms considered search for a unit direction \(v\) whose projections maximize some univariate dispersion measure \(S(\cdot)\); that is

$$ v \in \underset{\lVert a \rVert_2 = 1}{\operatorname{argmax}} \; S(Ya) $$

The standard deviation is the dispersion measure used by PCA.

Unlike the eigenvector solutions that arise in PCA, there is generally no efficiently computable solution for robust dispersion measures, so these must be approximated. The paper therefore uses PCA-GRID, a successful method for approximating robust PCA subspaces.
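To see why the choice of dispersion measure matters, the following simplified 2D sketch searches a grid of unit directions and compares the standard deviation with the median absolute deviation (MAD). It is only an illustration of the idea of a grid search over directions, not the full PCA-GRID algorithm, and the data and function names are made up.

```python
import numpy as np

def mad(z):
    """Median absolute deviation, a robust dispersion measure."""
    return np.median(np.abs(z - np.median(z)))

def best_direction_2d(Y, dispersion, n_angles=180):
    """Grid search over unit directions in the plane for maximal dispersion."""
    angles = np.linspace(0.0, np.pi, n_angles, endpoint=False)
    dirs = np.column_stack([np.cos(angles), np.sin(angles)])
    scores = np.array([dispersion(Y @ v) for v in dirs])
    return dirs[np.argmax(scores)]

rng = np.random.default_rng(0)
# Normal traffic varies mostly along the first axis.
inliers = rng.normal(0.0, 1.0, size=(200, 2)) * np.array([3.0, 0.3])
# A few poisoned points pushed far along the second axis.
outliers = np.tile([0.0, 100.0], (10, 1))
Y = np.vstack([inliers, outliers])

v_std = best_direction_2d(Y, np.std)   # dispersion measure used by plain PCA
v_mad = best_direction_2d(Y, mad)      # robust alternative
print(v_std, v_mad)
```

With the standard deviation, a handful of extreme points is enough to rotate the leading direction toward the contamination, while the MAD-based direction stays close to the bulk of the data.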

To better understand the efficacy of a robust PCA algorithm, the paper demonstrates the effect the poisoning techniques have on the PCA algorithm and contrasts it with the effect on the PCA-GRID algorithm.

PCA-GRID

Here the data has been projected into the 2D space spanned by the 1st principal component and the direction of the attack flow. The effect on the 1st principal components of PCA and PCA-GRID is shown under a globally informed attack (represented by points).

Robust Laplace Threshold

Instead of the normal distribution assumed by the Q-statistic, this paper uses the quantiles of a Laplace distribution specified by a location parameter \(c\) and a scale parameter \(b\). Critically, though, instead of using the mean and standard deviation, the authors fit the distribution’s parameters robustly: they estimate \(c\) and \(b\) from the squared residuals \(\lVert y_a(t) \rVert^2\) using robust consistent estimates of location (median) and scale (MAD).

where \(P^{-1}(q)\) is the \(q\)th quantile of the standard Laplace distribution. The Laplace quantile function has the form \(P^{-1}_{c,b}(q) = c + b \cdot k(q)\) for some \(k(q)\).
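A sketch of how such a threshold could be computed. The location is the median and the scale is derived from the MAD; the conversion \(b = \mathrm{MAD} / \ln 2\) used here is the consistency factor for a Laplace distribution and is our assumption, so the paper's exact normalization may differ. The function names are hypothetical.

```python
import math
import numpy as np

def laplace_quantile(q, c, b):
    """Quantile function of a Laplace(c, b) distribution, for 0 < q < 1."""
    return c - b * math.copysign(1.0, q - 0.5) * math.log(1.0 - 2.0 * abs(q - 0.5))

def robust_laplace_threshold(sq_residuals, q=0.999):
    """Fit location and scale robustly, then return the q-th Laplace quantile."""
    c = float(np.median(sq_residuals))                 # robust location
    mad = float(np.median(np.abs(sq_residuals - c)))   # robust scale
    b = mad / math.log(2.0)                            # assumed MAD-to-scale factor
    return laplace_quantile(q, c, b)

# One huge residual (100.0) barely moves the median/MAD-based threshold.
sq_residuals = np.array([1.0, 2.0, 3.0, 4.0, 100.0])
threshold = robust_laplace_threshold(sq_residuals, q=0.75)
```

Because the median and MAD ignore the magnitude of extreme values, a few poisoned residuals cannot inflate the threshold the way they would with a mean and standard deviation.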

Methodology

They first collected data over a 6-month period, consisting of measurements across network flows. They wanted to evaluate how well ANTIDOTE performs in the face of poisoning and DoS attacks. They used two weeks of data for this, one for training and one for testing. The poisoning occurs during the training week, and the attack occurs during the test week. They also had a second method where training and poisoning occurred over multiple weeks, called the Boiling Frog method. They measured success by the false negative rate (FNR), the ratio of successful evasions to the total number of attacks.

To compute FNRs, they generated anomalies using the Lakhina et al. method and injected them into the collected data. Data is binned in 5-minute windows, and a decision is made at the end of each window as to whether or not there was an attack. To compute false positive rates (FPRs), they generate negative examples by fitting the data to a model; points in the data that differ from the model by only a small amount are selected as “benign.” If the detector raises an alarm for these points, it is a false positive. They also carried out the Boiling Frog method, whose mathematical procedure is more involved; see the paper for details.

Effectiveness of Poisoning

During the testing phase of the single-training-period experiment, a DoS attack was launched in the 5-minute windows. The graph below indicates evasion is smallest with the uninformed strategy, intermediate for the locally-informed strategy, and largest for the globally-informed strategy. This makes sense, since the globally-informed attacker knows more than the others.

For the multi-training poisoning (boiling frog), the schedules were varied with increasing growth rates from week to week. The evasion graph is shown below. The three slower schedules have a small rejection rate. The 15% schedule has a higher rejection rate, but after a month of injected poison data, the rates drop off.

How well does ANTIDOTE perform in the face of attacks?

We can see that the evasion success of the attack is dramatically reduced with ANTIDOTE in the figure below if we compare it to the evasion graph for single training poisoning in the previous section. The evasion success is cut in half. The most effective poisoning scheme against PCA was the globally-informed one, but against ANTIDOTE it was the least effective scheme.

The results for the boiling frog strategy are shown in the graph below. For the stealthiest poisoning schedules (1.01 and 1.02), ANTIDOTE is most effective in reducing evasion success. Also, under PCA the evasion success increased with time. However, with ANTIDOTE, evasion success starts to drop off after some time.

References

[1] Matthew Jagielski, Alina Oprea, Battista Biggio, Chang Liu, Cristina Nita-Rotaru and Bo Li. Manipulating Machine Learning: Poisoning Attacks and Countermeasures for Regression Learning. April 2018.

[2] Xiao, Huang, et al. “Is feature selection secure against training data poisoning?” International Conference on Machine Learning. 2015.

[3] Fischler, Martin A., and Robert C. Bolles. “Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography.” Readings in computer vision. 1987. 726-740.

[4] Huber, Peter J. “Robust estimation of a location parameter.” The annals of mathematical statistics (1964): 73-101.

[5] Chen, Yudong, Constantine Caramanis, and Shie Mannor. “Robust sparse regression under adversarial corruption.” International Conference on Machine Learning. 2013.

[6] Nelson, Blaine, et al. “Exploiting Machine Learning to Subvert Your Spam Filter.” LEET 8 (2008): 1-9.

[7] Ali Shafahi, W. Ronny Huang, Mahyar Najibi, Octavian Suciu, Christoph Studer, Tudor Dumitras, and Tom Goldstein. “Poison Frogs! Targeted Clean-Label Poisoning Attacks on Neural Networks.” April 2018.

[8] Szegedy, Christian, et al. “Rethinking the inception architecture for computer vision.” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2016): 2818–2826.

[9] Rubinstein, Benjamin IP, et al. “Antidote: understanding and defending against poisoning of anomaly detectors.” Proceedings of the 9th ACM SIGCOMM conference on Internet measurement. ACM, 2009.

Class 10: Formal Verification Methods

Motivation

Similar to what we saw in Class 6, we would like to have formal bounds on how robust a machine learning model is under attack. The following two papers aim at achieving this robustness by proving properties about the underlying neural networks. This strategy aims to end the arms race of attacks and defenses commonly seen in the literature, and to provide formal guarantees for defenses with respect to any type of adversary.

Differential Privacy and Adversarial Robustness

Mathias Lecuyer, Vaggelis Atlidakis, Roxana Geambasu, Daniel Hsu, Suman Jana. On the Connection between Differential Privacy and Adversarial Robustness in Machine Learning. February 2018. arXiv e-print [PDF]

In the privacy domain, we are able to provide formal guarantees of the privacy of records by introducing the property of differential privacy: for two data sets that differ in one record, the difference between the output predictions on each one is less than some bounded amount.

In this paper, the authors extend this property to adversarial examples by relating changes in a data set to changes in an input: for two inputs that differ by some perturbation, the difference between the output predictions on each one is less than some bounded amount.

More formally, $$ P(A(x) = i) \le e^{\epsilon} P(A(x') = i) + \delta $$ where \( x' \) is some perturbed version of \( x \), \( A \) is the algorithm in question, and \( P(A(x) = i) \) is the probability that \( A \) assigns class \( i \) to \( x \).

The authors apply this property to images and call it PixelDP. They go on to prove that if a model satisfies PixelDP with respect to the 0-norm metric for a set of inputs, then it is robust to 0-norm attacks on those inputs. (0-norm here indicating how many pixels were changed).

They accomplish this by also establishing an upper bound on the second highest class assignment for the perturbation, \( \max_{j \ne i} p_j(x') \), using the same principle of robustness as defined above:

$$ P(A(x') = j) \le e^{\epsilon}P(A(x) = j) + \delta $$

From these two bounds, they arrive at the core proposition that an algorithm satisfies PixelDP robustness to 0-norm attacks if the following holds:

$$ p_{i}(x) > e^{2 \epsilon} \max_{j: j \ne i} p_{j}(x)+ (1+e^{\epsilon}) \delta $$


With this proposition the authors devise a method for attaining this property, primarily by adding and accounting for noise during training and testing.
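The robustness condition in the proposition is a simple inequality on the predicted class probabilities, so it can be checked directly. A minimal sketch, with a hypothetical function name and made-up probability vectors:

```python
import math

def is_pixeldp_robust(probs, epsilon, delta):
    """Check p_i > e^(2*eps) * max_{j != i} p_j + (1 + e^eps) * delta."""
    ranked = sorted(probs, reverse=True)
    top, runner_up = ranked[0], ranked[1]
    bound = math.exp(2.0 * epsilon) * runner_up + (1.0 + math.exp(epsilon)) * delta
    return top > bound

confident = is_pixeldp_robust([0.90, 0.05, 0.05], epsilon=0.5, delta=0.01)
uncertain = is_pixeldp_robust([0.50, 0.40, 0.10], epsilon=0.5, delta=0.01)
```

The first prediction has a large enough margin over the runner-up class to satisfy the condition; the second does not, so no robustness claim can be made for it at these values of \(\epsilon\) and \(\delta\).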

Noise Layer

The authors’ basic approach is to add a noise layer to the neural network. They first establish that the noise added should be proportional to the sensitivity of the layer, which is more difficult to compute for deeper layers. They recommend placing the noise in the first few layers of the network as it is easiest to bound the sensitivity, and acknowledge that trade-offs of accuracy and robustness play a role in the decision process.

The noise layer itself simply uses Laplacian and Gaussian mechanisms to add noise, and the network is trained with the noise layer to allow decent accuracy.

Testing Strategy

To account for the noise during testing, the authors run the input through the network multiple times. For each run, the label with the highest predicted score is chosen, and these results are counted and aggregated. This also allows the variance of each label to be computed.
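The repeated-query procedure can be sketched as below. This is a toy illustration under several assumptions: the "network" is a linear scoring function, the noise layer is modeled as Laplace noise added to the input, and the per-label variance is taken to be the Bernoulli variance of the vote frequency. None of these names or choices come from the paper.

```python
import numpy as np

def noisy_predict(scores_fn, x, n_draws, noise_scale, rng):
    """Run the noisy model n_draws times and aggregate the winning labels."""
    n_classes = len(scores_fn(x))
    counts = np.zeros(n_classes, dtype=int)
    for _ in range(n_draws):
        noisy_x = x + rng.laplace(0.0, noise_scale, size=x.shape)
        counts[np.argmax(scores_fn(noisy_x))] += 1   # label with the highest score
    freqs = counts / n_draws
    variances = freqs * (1.0 - freqs)                # variance of each label's vote
    return int(np.argmax(counts)), freqs, variances

W = np.eye(2)                                        # assumed toy linear "network"
scores_fn = lambda v: W @ v
rng = np.random.default_rng(0)

label, freqs, variances = noisy_predict(
    scores_fn, np.array([5.0, 0.0]), n_draws=200, noise_scale=0.5, rng=rng)
```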

The idea of making multiple queries may seem contradictory to the idea of privacy, where each query takes away from a privacy budget. However, we are not concerned with privacy leakage here, only with bounding the output of the function.

Robustness

Finally, to have a measure of robustness, the authors propose a way of deriving a bound on the maximum perturbation allowed. This is accomplished by finding the maximum \( L \) such that the proposition defined above still holds. The resulting \(L_{max}\) can be compared against some threshold \( T \) such that if \( L_{max} \ge T \) then the model is robust against that input. That is to say, a perturbation of size at most \( T \) to the given image would not be sufficient to change its label.

This metric is, of course, dependent on a given test set, but it allows the claim that a certain portion of the test set is “safe”, meaning the prediction is robust for that test set. If the test set is representative of the input domain examples that matter, this can give us some confidence (but no guarantee) that the model is robust for other inputs also.

Evaluation

To evaluate their approach, the authors used three accuracy metrics.

- Conventional accuracy: the proportion of correct predictions

- Robust accuracy: the proportion of correct and robust predictions

- Robust precision: the proportion of correct predictions relative to the number of robust predictions.
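The three metrics can be computed from two Boolean flags per test example: whether the prediction was correct, and whether it was certified robust at the chosen threshold. A small sketch with made-up flags and a hypothetical function name:

```python
import numpy as np

def pixeldp_metrics(correct, robust):
    """Return (conventional accuracy, robust accuracy, robust precision)."""
    correct = np.asarray(correct, dtype=bool)
    robust = np.asarray(robust, dtype=bool)
    n = correct.size
    both = (correct & robust).sum()
    conventional = correct.sum() / n
    robust_accuracy = both / n
    n_robust = robust.sum()
    # Robust precision is undefined when no prediction is robust.
    robust_precision = both / n_robust if n_robust else float("nan")
    return conventional, robust_accuracy, robust_precision

correct = [True, True, True, False]
robust = [True, True, False, True]
conv_acc, rob_acc, rob_prec = pixeldp_metrics(correct, robust)
```

Here three of four predictions are correct, two are both correct and robust, and two of the three robust predictions are correct.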

They first tested accuracy on benign samples with different noise levels, \( L \) and different thresholds for robustness \( T \).

They found that, with higher \( T \), robust accuracy decreases but robust precision increases, and that the threshold should be tuned based on the amount of noise.

They then tested the accuracy on malicious samples, comparing to the Madry defense [3]

This shows that for a 2-norm attack, their defense is comparable to the Madry defense, but for an inf-norm attack, the Madry defense is better. These results make sense because the Madry defense was built around \(L_{\infty}\) attacks, while PixelDP is for \(L_0\) and \(L_2\) attacks. This raises the question of whether we can have a differential-privacy-based defense in the \(L_{\infty}\) space, but at the moment there is no clear mapping between the two.

Piecewise Linear Neural Network Verification

Rudy Bunel, Ilker Turkaslan, Philip H.S. Torr, Pushmeet Kohli, M. Pawan Kumar. Piecewise Linear Neural Network verification: A comparative study. arXiv e-print November 2017. [PDF]

In this paper, the authors look at providing guarantees for piecewise linear neural networks for the purposes of verification, and compare several ways of doing so. For all of these approaches, the problem is defined in the same general way. We are given a network that implements a function \( y = f(x) \) and some bounded input domain \( C \), and we want to prove some property \( P \) about the network’s output. This can be formulated as shown below: $$ x \in C, y = f(x) \implies P(y) $$

In this problem definition, we see that we are only considering piecewise-linear neural networks (PL-NNs). This is justified by the observation that PL-NNs represent the majority of networks used in practice. In addition, the properties mentioned in the problem statement are defined to be Boolean formulas over linear inequalities.

Verification Methods

The authors then discuss several verification methods, all of which leverage the piecewise linear structure of PL-NNs. All of the compared methods follow the same general principle: search for a counterexample that makes the given property false. If this counterexample problem is unsatisfiable, then no counterexample exists and hence the property must be true.

Mixed Integer Programming Encoding

The difficulty in the general problem comes from non-linearities: we must deal with the max functions (such as ReLU) applied to the outputs of the network's units. In this method, the authors bypass this by replacing these nonlinear max functions with a set of linear inequalities over additional binary variables. These can then be passed to a mixed integer programming solver to see whether a counterexample can be found.
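To make the replacement of a max function by inequalities concrete, here is a minimal sketch of the standard "big-M" encoding of a single ReLU, \( y = \max(0, x) \), assuming known bounds \( l \le x \le u \) and one binary variable \( \delta \). The function names and the candidate grid are hypothetical and only for illustration; a real verifier would hand these constraints to a MIP solver rather than enumerate values.

```python
def relu_mip_feasible(x, y, delta, lower, upper, tol=1e-9):
    """Check the big-M constraints that encode y = max(0, x) for lower <= x <= upper.

    delta is the binary "phase" variable: 1 means the unit is active (y = x),
    0 means it is inactive (y = 0).
    """
    return (
        delta in (0, 1)
        and y >= -tol                              # y >= 0
        and y >= x - tol                           # y >= x
        and y <= x - lower * (1 - delta) + tol     # y <= x - l * (1 - delta)
        and y <= upper * delta + tol               # y <= u * delta
    )


def feasible_outputs(x, lower, upper, candidates):
    """Return every candidate y that satisfies the constraints for some delta."""
    return sorted(
        {y for y in candidates for delta in (0, 1)
         if relu_mip_feasible(x, y, delta, lower, upper)}
    )


if __name__ == "__main__":
    grid = [-2.0, -1.0, 0.0, 0.5, 1.0, 2.0, 3.0]
    for x in (-1.0, 0.0, 1.0):
        print(x, feasible_outputs(x, lower=-2.0, upper=3.0, candidates=grid))
```

For each fixed input, the only output that satisfies all four inequalities is the ReLU value itself, which is why a solver can reason about the network using linear constraints plus binary variables.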

Reluplex

This method essentially assigns values to all the variables, even if some of the constraints are violated. It then goes through and tries to fix the violated constraints at each step. Reluplex is covered more extensively in our previous blog post.

Planet

This method operates by attempting to find an assignment to the phase of the non-linearities. Similar to Reluplex, it assigns values to the variables and then at each step verifies the feasibility of the assignment. Unlike Reluplex, this also has the advantage of being easily extended to networks containing MaxPooling units. In order to detect incoherent assignments, the author also employs a global linear approximation to the neural network. This is done by approximating the nonlinear constraints as a set of linear constraints that represent the convex hull of the nonlinearities.

Branch and Bound Optimization for Verification

This method transforms the satisfiability problem into an optimization problem. Extra neurons are added to the end of the network so that the global minimum of the new output tells us whether the original problem was satisfiable. Essentially, any Boolean formula over linear inequalities on the output of the network is formulated as a sequence of additional layers, which reduces the verification problem to a global minimization problem.

Optimization algorithms such as stochastic gradient descent are not appropriate for this minimization problem, as they do not guarantee a global minimum. The authors thus propose a branch and bound algorithm. In essence, the algorithm is a breadth-first search over subdomains of the input. It computes upper and lower bounds on the minimum of the output. The best upper bound found so far serves as a candidate for the global minimum, and the iterative splitting of the BFS-style search yields a tighter and tighter lower bound.
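The following is a minimal sketch of this idea on a toy problem, not the authors' implementation. It assumes a hypothetical one-input ReLU network (`UNITS`), uses simple interval arithmetic for the lower bound, and evaluates the network at the midpoint of each subdomain for the upper bound; the paper's bounding and splitting strategies are more sophisticated.

```python
from collections import deque

# Hypothetical toy network: f(x) = sum_j v_j * relu(w_j * x + b_j).
UNITS = [(1.0, 1.0, 1.0), (1.0, 0.0, -2.0), (1.0, -1.0, 2.0)]  # (w, b, v)


def relu(z):
    return max(0.0, z)


def f(x):
    return sum(v * relu(w * x + b) for w, b, v in UNITS)


def lower_bound(lo, hi):
    """Interval-arithmetic lower bound on the minimum of f over [lo, hi]."""
    total = 0.0
    for w, b, v in UNITS:
        z_lo, z_hi = sorted((w * lo + b, w * hi + b))
        a_lo, a_hi = relu(z_lo), relu(z_hi)
        # A positive output weight is minimized by the smallest activation,
        # a negative one by the largest activation.
        total += v * a_lo if v >= 0 else v * a_hi
    return total


def branch_and_bound(lo, hi, eps=1e-3, max_iters=100_000):
    """Return an upper bound within eps of the global minimum of f on [lo, hi]."""
    best = f((lo + hi) / 2)          # best upper bound found so far
    queue = deque([(lo, hi)])        # FIFO queue gives breadth-first order
    for _ in range(max_iters):
        if not queue:
            break
        a, b = queue.popleft()
        mid = (a + b) / 2
        for sub in ((a, mid), (mid, b)):
            best = min(best, f((sub[0] + sub[1]) / 2))
            # Keep a subdomain only if it could still contain a better minimum.
            if lower_bound(*sub) < best - eps:
                queue.append(sub)
    return best


if __name__ == "__main__":
    print(branch_and_bound(-2.0, 2.0))
```

On the full domain the interval lower bound is very loose, which is why splitting is needed: each split tightens the lower bounds until every remaining subdomain can be pruned against the best upper bound.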

Evaluation

For the evaluation, they consider three data sets: Airborne Collision Avoidance System, Collision Detection, and Twin Stream. For each of these, they attempt to solve the satisfiability problem with a timeout of two hours. They define the success rate to be the proportion of properties successfully solved. A result of SAT means that a counterexample exists and the property is false; UNSAT means that the property is true. Another metric used is labeled “Number of Wins.” This counts the number of times a solver was the fastest to solve a property. The results for these data sets are shown below:

Overall, we can see that the proposed branch and bound method is competitive with other verification methods in the literature, and so it offers a new strategy for formal verification.

Suman’s Talk

For the last section of class, Suman Jana from Columbia University gave us a talk on his ongoing research. He also discussed some of the core motivations for why it is important and difficult to study this area, citing the differences from testing and verifying regular programs, which can have formal specifications and be checked with SMT solvers. In machine learning, the idea is to learn the specification, and the resulting models are fundamentally much more opaque.

— Team Bus:

Anant Kharkar,

Atallah Hezbor,

Bhuvanesh Murali,

Mainuddin Jonas,

Weilin Xu

References

[1] M. Lecuyer, V. Atlidakis, R. Geambasu, D. Hsu, S. Jana. “On the Connection between Differential Privacy and Adversarial Robustness in Machine Learning” arXiv preprint arXiv:1802.03471, February 2018.

[2] R. Bunel, I. Turkaslan, P. H. S. Torr, P. Kohli, M. P. Kumar. “Piecewise Linear Neural Network verification: A comparative study” arXiv preprint arXiv:1711.00455, November 2017.

[3] A. Madry, A. Makelov, L. Schmidt, D. Tsipras, A. Vladu. “Towards Deep Learning Models Resistant to Adversarial Attacks” arXiv preprint arXiv:1706.06083, June 2017.

Class 9: Adversarial Malware Detection

Evolution of the Malware Arms Race

Babak Bashari Rad, Maslin Masrom, and Suhaimi Ibrahim. Evolution of Computer Virus Concealment and Anti-Virus Techniques: A Short Survey. University Technology Malaysia. 6 April 2011. [PDF]

Since the first appearances of early malware, advances in both combating and creating viruses have mirrored the medical battle against evolving biological virus outbreaks: as anti-virus software continually develops innovative techniques for detecting existing viruses, virus writers seek out new methods to cheat those detection systems. To understand the present state of the field and the role of machine learning in combating malware, let’s look at a brief history of how both the attacks and defenses have evolved in the malware domain.

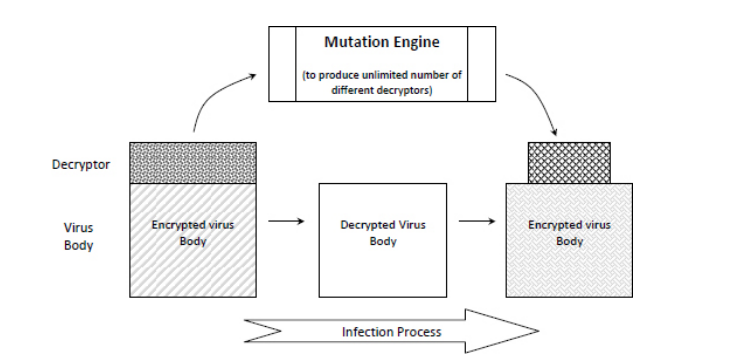

Encrypted Viruses

At its most basic, a virus is simply a program that is designed to alter the way a computer operates and spread from one computer to another. However, to be truly effective, a virus must also conceal itself so that it can replicate and infect another computer. Thus, the most primitive approach to hiding the operation of the virus code, first appearing in 1988, encrypts the binary code of the virus body in order to make it more difficult to analyze and detect.

Encrypted viruses are typically made up of two parts: the encrypted body of the virus itself, and a small (unencrypted) segment of decryption code. When the infected program runs, the decryption loop executes, which first decrypts the encrypted virus code, and then moves the program control to the body of the virus code:

Encryption is useful to a virus writer in several ways. Most importantly, it disguises suspicious code from static code analysis, which would automatically analyze the code and generate a warning if it were in unencrypted plaintext. Encryption also discourages tampering: since new viruses could accidentally be produced by minor changes to the original virus code, encryption ensures that an expert is required to dismantle the virus, or else run the risk of producing further harm by modifying it. Finally, an encrypted virus cannot be detected by simple string matching prior to decryption, because only the decryptor loop is identical in all variants of the virus. Thus, in an anti-virus inspection that attempts to match the signature of an encrypted virus, the choice of signature string is constrained and it must be selected precisely.

Morphic Viruses

The constant decryptor loop turned out to be the downfall of static encrypted viruses, however: since the decryptor loop code segment remained constant in newly infected files, anti-virus software had no trouble obtaining a signature string with which to seek matches in code during an inspection. To overcome this vulnerability, virus writers employed techniques to mutate the decryptor body, giving rise to a new type of concealed virus: oligomorphic viruses.

Rather than passing on the exact same decryptor loop code to each instance of the virus, oligomorphic viruses substitute the decryptor code in new offspring when reproducing themselves. This idea is most easily implemented by providing a set of different decryptor loops rather than one, which makes the detection process more difficult for signature-based scanning engines.

In fact, the most common approach in anti-virus tools is signature scanning, which uses small strings called “signatures” (the results of manual analysis of viral code) to identify viruses and malware. A signature is meant to identify a virus when a match is found. It is therefore vital that any given virus signature maps only to a specific virus, rather than to other viruses or, worse, to benign programs, in order to avoid flagging non-virus code.
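As a rough illustration of why a constant decryptor loop is a liability, the sketch below runs a naive substring-based signature scan over inert byte strings. Everything here is a stand-in chosen for illustration: `BODY` and `STUB` are harmless marker strings, single-byte XOR plays the role of the encryption, and `scan` is a hypothetical scanner, not the algorithm of any real anti-virus engine.

```python
def xor_bytes(data: bytes, key: int) -> bytes:
    """Toy 'encryption': XOR every byte with a single-byte key."""
    return bytes(b ^ key for b in data)


def scan(sample: bytes, signatures: dict) -> list:
    """Return the names of all signatures that occur as substrings of the sample."""
    return [name for name, sig in signatures.items() if sig in sample]


BODY = b"PAYLOAD-MARKER"   # inert stand-in for the plaintext virus body
STUB = b"DECRYPT-LOOP"     # inert stand-in for the constant decryptor loop


def make_variant(key: int) -> bytes:
    """Constant decryptor stub followed by the body 'encrypted' with a per-variant key."""
    return STUB + xor_bytes(BODY, key)


if __name__ == "__main__":
    signatures = {"body": BODY, "decryptor": STUB}
    for key in (0x21, 0x42):
        print(hex(key), scan(make_variant(key), signatures))
```

The body signature never matches because each variant encrypts the body with a different key, but the decryptor signature matches every variant. Oligomorphic and polymorphic viruses respond by varying the stub as well.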

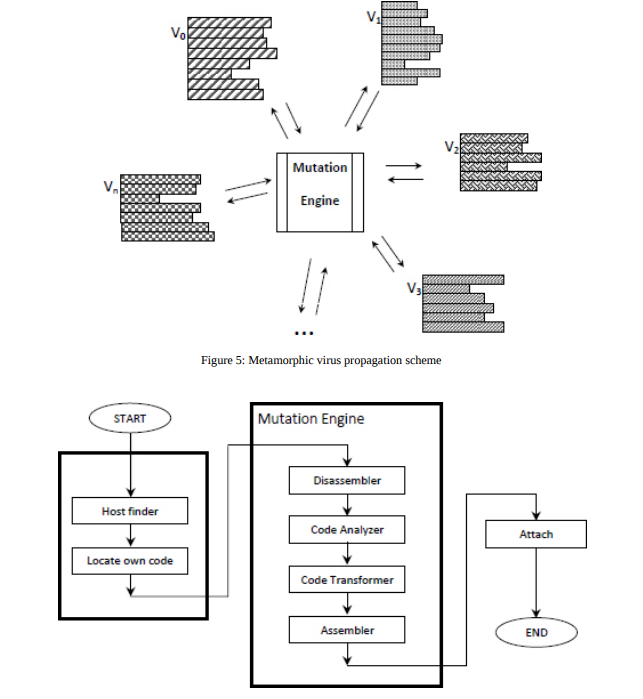

To cheat signature scanning, polymorphic viruses actually take oligomorphic viruses one step further, exploiting mutation algorithms to create a potentially infinite number of variations of decryptors in the next generation - when the virus replicates to infect a new host, it mutates its own decryptor loop to create a new permutation to pass on to the next generation. Thus, there is no consistent signature pattern from host to host that could be detected by signature scanning.

Despite these clever evasion techniques, in all the previous viruses described, once the virus body is decrypted, it is easily detected by signature scanning in its plaintext form. Metamorphic viruses, also dubbed “body-polymorphics”, do not even require encryption to avoid detection. Instead, they mutate their entire body, rather than just their decryption loop, using the same mutation techniques that polymorphic viruses use to generate new decryptor loops.

Machine Learning in Malware

Konrad Rieck, Thorsten Holz, Carsten Willems, Patrick Düssel, Pavel Laskov. Learning and Classification of Malware Behavior. 15th USENIX Security Symposium. 23 April 2008. [PDF]

When an unknown malware instance is discovered, there are two important questions to be asked:

- Does the new malware instance belong to a known malware family, or does it constitute a novel malware strain?

- What behavioral features are discriminative for distinguishing instances of a given malware family from others?

To automate answering these questions, Rieck et al. train a classifier on malware collected from honeypots and spam-traps to map unknown viruses to known malware families, or to a new class altogether, and to uncover properties that characterize families of viruses.

First, the behavior of each binary is monitored in a sandbox environment, and behavior-based analysis reports summarizing its operations are generated. Then, the learning algorithm embeds the generated analysis reports in a high-dimensional vector space and learns a discriminative model for each malware family. That is, it creates a model to predict whether an instance belongs to a given known family or not, and the outputs of these per-family models are then combined to produce a final decision. This process answers the first question.

Further, to understand and evaluate the importance of specific features for malware behavior classification, the authors sort the weights of behavioral patterns in the learned model and consider the most prominent patterns to obtain characteristic features of each malware family.
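The pipeline above can be sketched in a few lines. This is a simplified illustration under several assumptions, not the authors' system: the reports are hypothetical lists of operation names, the embedding is a binary bag of tokens, and a simple perceptron stands in for the linear classifier trained per family. The "unknown" fallback when no family model gives a positive score is likewise only a sketch of how a novel strain might be flagged.

```python
from collections import defaultdict


def train_family_model(reports, labels, family, epochs=10):
    """One-vs-rest perceptron over a binary bag-of-tokens embedding."""
    weights = defaultdict(float)
    for _ in range(epochs):
        for tokens, label in zip(reports, labels):
            target = 1.0 if label == family else -1.0
            score = sum(weights[t] for t in set(tokens))
            if target * score <= 0:            # misclassified: update weights
                for t in set(tokens):
                    weights[t] += target
    return weights


def classify(tokens, models):
    """Pick the family with the highest score, or 'unknown' if none is positive."""
    scores = {fam: sum(w.get(t, 0.0) for t in set(tokens)) for fam, w in models.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


def top_features(weights, k=2):
    """Behavioral patterns with the largest weights characterize the family."""
    return sorted(weights, key=weights.get, reverse=True)[:k]


# Hypothetical sandbox reports, each reduced to a list of observed operations.
REPORTS = [
    ["copy_file", "open_socket", "scan_network"],
    ["open_socket", "scan_network", "create_mutex"],
    ["connect_irc", "create_mutex", "copy_file"],
    ["connect_irc", "download_file"],
]
LABELS = ["worm", "worm", "bot", "bot"]
MODELS = {fam: train_family_model(REPORTS, LABELS, fam) for fam in set(LABELS)}

if __name__ == "__main__":
    print(classify(["scan_network", "open_socket", "download_file"], MODELS))
    print(classify(["delete_file"], MODELS))
    print(top_features(MODELS["worm"]))
```

Sorting the learned weights recovers the operations that most strongly indicate each family, which is how the second question (which features are discriminative) is approached.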

PDF Malware Classifiers

Charles Smutz and Angelos Stavrou. Malicious PDF Detection using Metadata and Structural Features. ACSAC 2012. [PDF]

Portable Document Format (PDF)

The Portable Document Format (PDF) file structure consists of four parts: header, body, cross-reference table (CRT), and trailer. The following figures show an example PDF file, the raw content of an example PDF file, and the corresponding structural tree of the PDF file, respectively.

PDF documents have become a prevalent target of both mass and targeted attacks due to their wide use and diverse functionality. Popular PDF malware detectors include SL2013, Hidost, and PDFRate and its variations. Among them, SL2013 and Hidost are structure-based PDF classifiers, while PDFRate is content-based.

PDFRate

PDFRate, a real-world learning-based system introduced by George Mason University researchers Smutz and Stavrou, uses metadata and structural features to classify PDF files as benign or malicious with the Random Forest algorithm. The feature space of PDFRate contains 202 integer, floating point, and Boolean features selected from PDF metadata and file contents. Two strategies guided feature selection: avoiding reliance on specific byte sequences, and not targeting specific known vulnerabilities. Moreover, the strong interdependence of the features makes the adversary’s life difficult when they attempt to control feature values. The Contagio, Operational, and Community datasets have been used for training and evaluating PDFRate. With respect to the classification algorithm, the number of trees is 1000 and each tree carries 43 features.

The following are the most important features for classification out of a total of 202 features:

- count_font

- count_javascript

- count_js

- count_stream_diff

- pos_box_max

- image_totalpx

- producer_len

Adversarial Analysis

PDFRate achieves true positive rates well above 99% while maintaining false positive rates of 0.2% or less across different datasets, classification parameters, and experimental conditions.

A mimicry attack, in which malicious documents are artificially modified to make some of their features similar to those of benign documents while retaining the malicious part, is one conceivable evasion technique. To fool the defender, the attacker's key step is to mimic the features that are most important for classification.

In the mimicry attack effectiveness test where the previously extracted features are purposefully modified, the six most important features were selected for evasion testing. As shown in the table below, the conclusion is that the classification error can increase to a great extent.

The table below shows the increase in classification error when the top features ranked by the random forest are removed. The effect is surprisingly small, because many other important features are retained.

The table below shows the testing results of the effectiveness of perturbation. The goal of using perturbation, i.e. increasing the feature variance by modifying the features of a malicious subset in the training set, is to reduce the importance of these features without fully negating them in order to counter evasion.

Since this approach is behavior-based rather than signature-based, it can both identify and capture the most important behaviors of similar malware, allowing for detection that does not rely on exact signature matching, but rather on automated learning of behavioral patterns. This supports the claim that machine learning could provide innovative avenues into the malware field.

The finding that the ensemble classifier offers robust detection against mimicry attacks, even when the attacks exploit the top features, is exciting. Future directions to explore include applying the detection and classification techniques to other document types, studying the suitability of the features for grouping malicious documents, combining other features, and comparing performance with other techniques.

Hidost: A Static Machine-Learning-Based Detector of Malicious Files

Nedim Šrndić and Pavel Laskov. Hidost: a static machine-learning-based detector of malicious files. EURASIP Journal on Information Security, 2016. [PDF] (earlier version in NDSS 2013: [PDF])

There has been a substantial amount of work on the detection of non-executable malware, including static, dynamic, and combined methods. Although static methods are orders of magnitude faster, their applicability has been limited to specific file formats. Hidost is a static machine-learning-based malware detection system that works on multiple file formats with a hierarchical document structure, such as PDF and SWF.

Hierarchically structured file formats

File formats are developed as a means to store a physical representation of certain information, but not all of them have a logical structure. For example, plain text files have no logical structure, while others, e.g., HTML files, represent logical relationships between HTML elements. The following figure shows the hierarchical structure of an SWF file. The PDF file structure was discussed in the section above.

Distinguishing benign from malicious files

In a PDF structural tree, a path is defined as a sequence of edges starting in the Catalog dictionary and ending with an object of primitive type. For example, in the PDF structural tree figure above, there is a path from the root (i.e., leftmost) node through the edges named /Pages and /Count to the terminal node with the value 2. This definition of a path in the PDF document structure, called a PDF structural path, plays a central role in the Hidost approach. Paths are printed as a sequence of all edge labels encountered during path traversal, starting from the root node and ending in the leaf node. The path from our earlier example would be printed as /Pages/Count.

The following is a list of example structural paths from real-world benign files:

- /Metadata

- /Type

- /Pages/Kids

- /OpenAction/Contents

- /StructTreeRoot/RoleMap

- /Pages/Kids/Contents/Length

- /OpenAction/D/Resources/ProcSet

- /OpenAction/D

- /Pages/Count

- /PageLayout

The presence of these structural paths in a file suggests that it is benign, while their absence suggests that the file may be malicious. In addition, malicious files are unlikely to contain metadata (to minimize file size), they do not jump to a page in the document when it is opened, and they are often not well-formed, so they are missing paths such as /Type and /Pages/Count. The following is a list of structural paths from real-world malicious PDF files:

- /AcroForm/XFA

- /Names/JavaScript

- /Names/EmbeddedFiles

- /Names/JavaScript/Names

- /Pages/Kids/Type

- /StructTreeRoot

- /OpenAction/Type

- /OpenAction/S

- /OpenAction/JS

- /OpenAction

System Design

The system design of Hidost consists of six stages: structure extraction, structural path consolidation, feature selection, vectorization, learning, and classification as it is illustrated in the following figure.

File structure extraction

In this stage, files are transformed into a more abstract representation of their logical structure, called a structural multimap. A multimap associates every structural path with the set of all leaves that lie on that path. The concept is similar to a map, except that one key can have multiple leaves, as we see for the mediabox path in the following figure.
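As a minimal sketch of this stage, the function below walks a nested Python dictionary standing in for a parsed PDF and collects all leaves under each structural path. The document contents, the function name, and the choice to let array elements share their parent's path are assumptions made for illustration; a real PDF also contains reference cycles (for example /Parent links) that this sketch does not handle.

```python
from collections import defaultdict


def structural_multimap(node, path="", out=None):
    """Map each structural path to the list of primitive leaves found on it."""
    if out is None:
        out = defaultdict(list)
    if isinstance(node, dict):
        for key, child in node.items():          # each key is a named edge
            structural_multimap(child, f"{path}/{key}", out)
    elif isinstance(node, list):
        for child in node:                       # array elements share the path
            structural_multimap(child, path, out)
    else:
        out[path].append(node)                   # primitive value: a leaf
    return out


# Hypothetical parsed document with two pages.
DOC = {
    "Type": "Catalog",
    "Pages": {
        "Count": 2,
        "Kids": [
            {"MediaBox": [0, 0, 612, 792]},
            {"MediaBox": [0, 0, 612, 792]},
        ],
    },
}

if __name__ == "__main__":
    for path, leaves in sorted(structural_multimap(DOC).items()):
        print(path, leaves)
```

The /Pages/Count path maps to a single leaf, while /Pages/Kids/MediaBox collects eight values from the two pages, which is exactly the situation that requires a multimap rather than a plain map.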

Structural path consolidation

Semantically equivalent but syntactically different structures could otherwise be used to avoid detection. Hidost therefore applies heuristic rules that consolidate structural paths in order to reduce such polymorphic paths. For example, /Pages/Kids/Resources and /Pages/Kids/Kids/Resources can be reduced to /Pages/Resources.
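The example above can be expressed as a single rewrite rule. The sketch below assumes one hypothetical rule that collapses any run of /Kids segments directly under /Pages; Hidost's actual rule set is larger and is not reproduced here.

```python
import re

# Hypothetical consolidation rule: drop repeated /Kids segments under /Pages.
_PAGES_KIDS = re.compile(r"^/Pages(?:/Kids)+(?=/|$)")


def consolidate(path: str) -> str:
    """Rewrite a structural path into its consolidated form."""
    return _PAGES_KIDS.sub("/Pages", path)


if __name__ == "__main__":
    for p in ("/Pages/Kids/Resources", "/Pages/Kids/Kids/Resources", "/OpenAction/JS"):
        print(p, "->", consolidate(p))
```

Both page-tree variants map to the same feature after consolidation, so an attacker cannot create a "new" path simply by nesting the page tree one level deeper.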

Feature Selection

As in many machine learning pipelines, feature selection is used to remove rarely occurring features.

Vectorization

In the vectorization stage, structural multimaps are first replaced by structural maps—ordinary map data structures that map a structural path to a corresponding single numeric value. To this end, every set of values corresponding to one structural path in the multimap is reduced to its median.
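A minimal sketch of the median reduction follows. It assumes all leaf values are already numeric and that a path missing from a file is encoded as 0.0; both are simplifying assumptions for illustration, and the feature list is hypothetical.

```python
from statistics import median


def vectorize(multimap, feature_paths):
    """Reduce each path's values to their median, in a fixed feature order."""
    return [
        float(median(multimap[path])) if path in multimap else 0.0
        for path in feature_paths
    ]


if __name__ == "__main__":
    multimap = {
        "/Pages/Count": [2],
        "/Pages/MediaBox": [0, 0, 612, 792],
    }
    features = ["/Pages/Count", "/Pages/MediaBox", "/OpenAction/JS"]
    print(vectorize(multimap, features))
```

Fixing the order of `feature_paths` across all files is what turns each document into a vector of the same length, ready for a standard classifier.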

Learning and Classification

The authors use a Random Forest implementation at this stage, but any classifier could be used instead.

Experimental evaluation

The authors run their experiments on two datasets, one for the PDF and one for the SWF file format. Both datasets were collected from VirusTotal. A file is considered malicious if it is labeled as malicious by at least five engines, and benign only if all antivirus engines label it as benign. The following figure shows that Hidost performs better than the antivirus engines on VirusTotal. It should be mentioned that the engines on VirusTotal may not be the most effective antivirus products developed by their vendors.

Machine Learning on Malware is Hard

While machine learning algorithms have been successfully applied in various vision and natural language related tasks, they have not been fully successful in malware detection. The reasons are mainly three-fold:

High Cost of Error

In malware detection, both the false positive rate and the false negative rate are important measures of a classifier's performance. A false positive means that benign software is classified as malware, while a false negative means that malware is classified as benign. Both events are undesirable: a false positive costs a human analyst’s time, which is expensive, while a false negative leaves malware undetected, which can have serious security consequences. A typical machine learning model trained for malware detection tends to have non-zero false positive and false negative rates.
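For concreteness, the two rates can be computed from labels and predictions as in the short sketch below, where 1 denotes malware and 0 denotes benign software. The labels and predictions are made-up example data.

```python
def error_rates(y_true, y_pred):
    """Return (false positive rate, false negative rate); 1 = malware, 0 = benign."""
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    negatives = sum(1 for t in y_true if t == 0)
    positives = sum(1 for t in y_true if t == 1)
    return fp / negatives, fn / positives


if __name__ == "__main__":
    y_true = [1, 1, 1, 0, 0, 0, 0, 0]
    y_pred = [1, 1, 0, 0, 0, 0, 0, 1]
    fpr, fnr = error_rates(y_true, y_pred)
    print(f"FPR={fpr:.3f} FNR={fnr:.3f}")
```

Because benign files vastly outnumber malware in practice, even a small false positive rate can translate into a large absolute number of alerts for analysts to review.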

The above figure shows the example performance of a typical malware classifier (B) which has non-zero false positive rate, whereas an ideal classifier (A) would have zero false positive rate.

Semantic Gap

There is a disconnect between the prediction of a machine learning model and the action to be taken based on that prediction. For instance, if a model predicts that a file is malware with 65% confidence, what action should be taken? Should the target software be removed without notifying the end user? Should the user be alerted to perform a manual inspection? In such a scenario, it is hard to interpret the results and take a meaningful action.

Difficulty with Evaluation

One of the major difficulties in evaluating malware detection tools is the availability of datasets. Most public datasets, like Contagio or Drebin, are small or outdated, whereas malware keeps evolving aggressively. It is difficult to create rich new malware datasets due to data privacy concerns and the difficulty of labelling malware in the wild. This poses a problem for training and evaluating machine learning models.

Another difficulty is the evasiveness of malware. Malware has become dynamic enough to evade malware classifiers. Given white-box access to the classifier, an attacker can use gradient-based methods to craft malware that evades detection. Even with black-box access, the attacker can perform a mimicry attack by appending features of benign samples. The figure below shows an example of a mimicry attack. The file on the right is a benign file and the file on the left is a malicious file. By appending some features from the benign file to the malicious file, the classifier can be fooled into accepting the malicious file as benign.

However, this may or may not break the malware’s functionality, and hence it is hard to generate useful samples. One variant is the reverse-mimicry attack, which embeds malicious features into a benign file to generate malicious samples, as shown in the figure below.

Recent literature has also shown evasion by genetic mutation and hill climbing method, where the malware can dynamically adapt to evade detection.

In a genetic mutation attack, adversarial malware samples are generated by repeatedly applying operations to the malware sample until the classifier accepts it as benign. The operations are insertion, deletion, or replacement of features in the malicious file, using features taken from benign files. The process only requires a binary output from the classifier indicating whether the input was classified as malicious or not.

In the hill climbing method, a malicious file is taken as input and variants are recursively generated and tried until a variant is accepted as benign by the classifier while retaining its malicious functionality. In the figure below, the state e is the end state where the process has found the required adversarial sample, and the process terminates.

Both the genetic mutation and hill climbing methods have been shown to fool the PDFRate and Hidost malware classifiers.

State of the Art

Liang Tong, Bo Li, Chen Hajaj, Chaowei Xiao, Yevgeniy Vorobeychik, “Hardening Classifiers against Evasion: the Good, the Bad, and the Ugly.” arXiv:1708.08327. August 2017. [PDF] [4]

Anti-malware defenders today attempt to passively analyze files of a specific type across the web and flag potential malware. Their models are often trained offline. Their adversaries work much more actively to produce malware that is misclassified. These attackers work against black-box or, often, black-world (no response) classifiers.

Behavior Preservation

Malware behavior preservation is difficult due to the nature of code; most changes risk breaking the code entirely. This means that random mutation processes need to be heavily limited to preserve the behavior of the code. Mutation processes are often either trivial or not done at all. Very few papers allow mutations and verify the process afterward, as it requires dynamic analysis in a sandbox.

For this reason, malware developers often turn to genetic algorithms to find evasive samples. The changes these algorithms make are more likely to preserve the behavior of the malware while creating a new sample that may be more evasive.

EvadeML

The search for evasive samples is often done using genetic algorithms, mostly with greedy search. EvadeML is one such approach; it creates evasive PDF malware targeting Hidost and PDFRate. It has a 100% success rate within 20 generations and works by mutating the raw PDFs.

The graph below plots the number of evasive specimens in a population of 500 malicious seeds against the generation, using the evolutionary method above. As is readily apparent, evasive malicious samples are not only easy to produce within a small number of generations, but are also numerous. This calls into question the value of the classifiers that we are using to identify malicious code.

The EvadeML group is also working on a project that limits the feature space, ideally enabling better-targeted classifiers. This is done via a process called feature squeezing. The process matters primarily in the filters displayed below, where the feature space is reduced to fewer variables before being passed through a model.

For each filter, a number of new features are computed as some function of the original feature set. This works similarly to the first layer of a neural network, except that there is more human involvement, other functions can be and are used, and once the filter function has been created, it is unchanged during the model training process. These filters can be built from rounding/cutoff functions, input smoothing, reducing color bit depth, and other functions that a neural net is generally incapable of creating naturally. The goal is to complement other defensive methods and directly limit the potential of adversarial examples.
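As one concrete example of such a filter, here is a minimal sketch of bit-depth reduction on values scaled to [0, 1]. The function name and the sample values are hypothetical, and the sketch shows only the squeezing step, not a full defense.

```python
import math


def squeeze_bit_depth(values, bits):
    """Quantize values in [0, 1] to 2**bits evenly spaced levels."""
    levels = 2 ** bits - 1
    # floor(x + 0.5) rounds half up, avoiding Python's round-half-to-even.
    return [math.floor(v * levels + 0.5) / levels for v in values]


if __name__ == "__main__":
    original = [0.10, 0.40, 0.60, 0.90]
    perturbed = [0.12, 0.38, 0.62, 0.88]   # small adversarial-style changes
    print(squeeze_bit_depth(original, bits=1))
    print(squeeze_bit_depth(perturbed, bits=1))
```

Both inputs collapse to the same squeezed vector, so small perturbations that stay within one quantization level can no longer change what the model sees.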

Deep Learning

There’s a slight disconnect between security researchers and pure ML researchers, particularly those doing research into deep learning. The deep learning architectures being researched for malware detection are multi-layer perceptrons on preselected features and convolutional networks, but there are very few recurrent models.

Detection software is increasingly working to identify dynamic features of malware, since static features of the code may never be triggered at runtime. This shift limits the attackers’ ability to mutate their code while maintaining functionality. It does, however, leave room for ‘time-bomb’ style malware, in which certain malicious features stay dormant until a certain date and time.

Future of the Field

While progress is being made in the field, there are a number of challenges that need to be met for malware detection and evasion to evolve. Much work has been done in the area of both static analysis and the mutation of static features. However, it’s clear that static analysis isn’t good enough for today’s tasks, and that effective detectors and mutators need to embrace dynamic features.

Using a learning model to algorithmically mutate dynamic features while preserving the functionality of the original code is a more complex problem. While effective dynamic feature mutation isn’t currently feasible with today’s research, a step in the right direction will certainly involve selecting an appropriate deep learning architecture. Perhaps recurrent neural networks, which fit sequence modeling tasks well, could be useful.

Detectors must evolve as well. Research has shown that some dynamic analysis algorithms are detectable, and that sophisticated threats can delay malicious behavior until they are no longer in a sandboxed environment. Effectively learning from sandboxed data streams and system calls will open up a new front in the arms race between detector and attacker.

Ultimately, from simple to metamorphic viruses, malware has evolved into increasingly sophisticated threats. The field of adversarial malware detection has been evolving as well, as methods are developed that are increasingly effective at detecting malicious code. As the cutting edge of malware detection moves forward, we will see new fronts open up in the contest between malicious files and the algorithms that detect them.

— Team Nematode:

Bargav Jayaraman, Guy “Jack” Verrier, Joshua Holtzman, Max Naylor, Nan Yang, Tanmoy Sen

References

[1] Babak Bashari Rad, Maslin Masrom, and Suhaimi Ibrahim, “Evolution of Computer Virus Concealment and Anti-Virus Techniques: A Short Survey.” IJCSI International Journal of Computer Science Issues, Vol. 8, Issue 1. January 2011

[2] Babak Bashari Rad, Maslin Masrom, and Suhaimi Ibrahim, “Camouflage in Malware: from Encryption to Metamorphism.” IJCSNS International Journal of Computer Science and Network Security, VOL.12 No.8. August 2012.

[3] Konrad Rieck, Thorsten Holz, Carsten Willems, Patrick Düssel, Pavel Laskov, “Learning and Classification of Malware Behavior.” 15th USENIX Security Symposium. 23 April 2008.

[4] Liang Tong, Bo Li, Chen Hajaj, Chaowei Xiao, Yevgeniy Vorobeychik, “Hardening Classifiers against Evasion: the Good, the Bad, and the Ugly.” arXiv:1708.08327. August 2017.

[5] R. Sommer and V. Paxson, “Outside the Closed World: On Using Machine Learning for Network Intrusion Detection.” 2010 IEEE Symposium on Security and Privacy, Oakland, CA, USA, 2010, pp. 305-316. May 2010.

[6] Weilin Xu, Yanjun Qi, and David Evans. “Automatically Evading Classifiers: A Case Study on PDF Malware Classifiers.” Network and Distributed Systems Symposium 2016.

[7] Hung Dang, Yue Huang, and Ee-Chien Chang. “Evading Classifiers by Morphing in the Dark”. ACM CCS 2017.

Class 8: Testing of Deep Networks

DeepXplore: Automated Whitebox Testing of Deep Learning Systems

Kexin Pei, Yinzhi Cao, Junfeng Yang, Suman Jana. 2017. DeepXplore: Automated Whitebox Testing of Deep Learning Systems. In Proceedings of ACM Symposium on Operating Systems Principles (SOSP ’17). ACM, New York, NY, USA, 18 pages. [PDF]

As deep learning is increasingly applied to security-critical domains, having high confidence in the accuracy of a model’s predictions is vital. Just as in traditional software development, confidence in the correctness of a model’s behavior stems from rigorous testing across a wide variety of possible scenarios. However, unlike in traditional software development, the logic of deep learning systems is learned through the training process, which opens the door to many possible causes of unexpected behavior, like biases in the training data, overfitting, underfitting, etc. As this logic does not exist as actual lines of code, deep learning models are extremely difficult to test, and those who do test them are faced with two key challenges:

- How can all (or at least most) of the model’s logic be triggered so as to discover incorrect behavior?

- How can such incorrect behavior be identified without manual inspection?

To address these challenges, the authors of this paper first introduce neuron coverage as a measure of how much of a model’s logic is activated by the test cases. To avoid manually inspecting output behavior for correctness, the outputs of multiple DL systems designed for the same purpose are compared across the same set of test inputs, following the logic that if the models disagree then at least one model’s output must be incorrect. These two solutions are then reformulated into a joint optimization problem, which is implemented in the whitebox DL-testing framework DeepXplore.

Limitations of Current Testing

The motivation for the DeepXplore framework is the inability of current methods to thoroughly test deep neural networks. Most existing techniques to identify incorrect behavior require human effort to manually label samples with the correct output, which quickly becomes prohibitively expensive for large datasets. Additionally, the input space of these models is so large that test inputs cover only a small fraction of cases, leaving many corner cases untested. Recent work has shown that these untested cases near model decision boundaries leave DNNs vulnerable to adversarial evasion attacks, in which small perturbations to the input cause a misclassification. And even when these adversarial examples are used to retrain the model and improve accuracy, they still do not have enough model coverage to prevent future evasion attacks.

Neuron Coverage

To measure the area of the input space covered by tests, the authors define what they call “neuron coverage,” a metric analogous to code coverage in traditional software testing. As illustrated in the accompanying figure, neuron coverage measures the percentage of nodes in the DNN that a given test input activates, just as code coverage measures the percentage of the source code that is executed. This is believed to be a better measure of the thoroughness of test inputs because the logic of a DNN is learned, not programmed, and exists primarily in the layers of nodes that compose the model, not in the source code.
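
As a rough illustration of the metric (not the DeepXplore implementation), neuron coverage can be computed from recorded activations. The function name `neuron_coverage`, the input layout (one array per layer, one row per test input), and the activation threshold of 0 are all assumptions made for this sketch.

```python
import numpy as np

def neuron_coverage(layer_activations, threshold=0.0):
    """Fraction of neurons activated above `threshold` by at least one test input.

    layer_activations: list of arrays, one per layer, each of shape
    (num_test_inputs, num_neurons_in_layer). Hypothetical layout for this sketch.
    """
    # For each layer, mark the neurons that fire for any of the test inputs.
    covered = [np.any(acts > threshold, axis=0) for acts in layer_activations]
    total_neurons = sum(c.size for c in covered)
    covered_neurons = sum(int(c.sum()) for c in covered)
    return covered_neurons / total_neurons

# Two test inputs, a 3-neuron layer followed by a 2-neuron layer.
activations = [
    np.array([[0.5, 0.0, 0.0], [0.0, 0.0, 0.2]]),
    np.array([[0.0, 1.0], [0.0, 0.0]]),
]
coverage = neuron_coverage(activations)
```

Adding a test input only ever keeps or raises this number, which is what makes it usable as a coverage target during test generation.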

Cross-referencing Oracles

To eliminate the need for expensive human effort to check output correctness, multiple DL models are tested on the same inputs and their behavior is compared. If different DNNs designed for the same application produce different outputs on the same input, at least one of them must be incorrect, which flags a potential model inaccuracy without requiring a manually assigned label.
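
A minimal sketch of this cross-referencing idea, assuming the predicted labels of each model have already been collected into one array (the function name `find_disagreements` and the array layout are hypothetical):

```python
import numpy as np

def find_disagreements(predictions):
    """Return indices of inputs on which the models do not all agree.

    predictions: array-like of shape (num_models, num_inputs) holding the
    label each model predicts for each input (assumed layout).
    """
    preds = np.asarray(predictions)
    # An input is suspicious if any model differs from the first model.
    return np.flatnonzero(np.any(preds != preds[0], axis=0))

# Three models, four inputs; the models disagree on inputs 1 and 2.
suspicious = find_disagreements([
    [0, 1, 2, 1],
    [0, 1, 1, 1],
    [0, 2, 2, 1],
])
```

Note that agreement does not imply correctness: an input on which every model makes the same mistake is not flagged by this kind of oracle.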

Method

The primary objective for the test generation process is to maximize the neuron coverage and differential behaviors observed across models. This is formulated as a joint optimization problem with domain-specific constraints (i.e., to ensure that a test case discovered is still a valid input), which is then solved using a gradient ascent algorithm.
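
To make the joint optimization concrete, here is a toy sketch of the idea, not DeepXplore itself. It assumes two logistic-regression "models" and a single tanh "neuron" so that all gradients have a closed form, and it uses clipping to [0, 1] as a stand-in for a domain-specific constraint. All names and the weights `lam1`, `lam2` are assumptions for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict(model, x):
    """Binary label from a (weights, bias) logistic model."""
    w, b = model
    return int(sigmoid(w @ x + b) > 0.5)

def generate_difference_input(x0, model_a, model_b, neuron_v,
                              lam1=1.0, lam2=0.1, step=0.1, max_iters=200):
    """Gradient ascent on p_a(x) - lam1 * p_b(x) + lam2 * h(x).

    The first two terms push the models apart (differential behavior); the
    last term rewards activating a chosen neuron h(x) = tanh(v . x).
    """
    (w_a, b_a), (w_b, b_b) = model_a, model_b
    x = np.array(x0, dtype=float)
    for _ in range(max_iters):
        p_a = sigmoid(w_a @ x + b_a)
        p_b = sigmoid(w_b @ x + b_b)
        if (p_a > 0.5) != (p_b > 0.5):
            break  # the two models now disagree on x
        h = np.tanh(neuron_v @ x)
        grad = (p_a * (1 - p_a) * w_a
                - lam1 * p_b * (1 - p_b) * w_b
                + lam2 * (1 - h ** 2) * neuron_v)
        # Constraint: keep the input inside the valid range [0, 1].
        x = np.clip(x + step * grad, 0.0, 1.0)
    return x

model_a = (np.array([2.0, 2.0]), -1.0)
model_b = (np.array([2.0, -2.0]), 0.5)
seed = np.array([0.5, 0.5])  # both models agree on the seed input
found = generate_difference_input(seed, model_a, model_b, np.array([0.5, 0.5]))
```

With real DNNs the gradients would come from automatic differentiation, and the constraint step would be replaced by whatever keeps the input valid in that domain.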

Experimental Set-up

The DeepXplore framework was used to test three DNNs for each of five well-known public datasets that span multiple domains: MNIST, ImageNet, Driving, Contagio/Virustotal, and Drebin. Because these datasets include images, video frames, PDF malware, and Android malware, different domain-specific constraints were incorporated into DeepXplore for each dataset (e.g. pixel values for images need to remain between 0 and 255, PDF malware should still be a valid PDF file, etc.). The details of the chosen DNNs and datasets can be seen in the table below.

For each dataset, 2,000 random samples were selected as seed inputs, which were then manipulated to search for erroneous behaviors in the test DNNs. For example, in the image datasets, the lighting conditions were modified to find inputs on which the DNNs disagreed. This is shown in the photos below from the Driving dataset for self-driving cars.

Deep k-Nearest Neighbors: Towards Confident, Interpretable and Robust Deep Learning

Nicolas Papernot, Patrick McDaniel. 2018. Deep k-Nearest Neighbors: Towards Confident, Interpretable and Robust Deep Learning. [PDF]

k-Nearest Neighbors

Deep learning is ubiquitous. Deep neural networks achieve good performance on challenging tasks like machine translation, diagnosing medical conditions, malware detection, and image classification. In this work, the authors discuss three well-identified criticisms of deep learning that are directly relevant to security: the lack of reliable confidence estimates, of model interpretability, and of robustness. To address them, the authors introduce the Deep k-Nearest Neighbors (DkNN) classification algorithm, which enforces conformity of the predictions made by a DNN on test data with respect to the model’s training data. For each layer in the neural network, the DkNN performs a nearest neighbor search to find the training points for which the layer’s output is closest to the layer’s output on the test input. It then analyzes the labels of these neighboring training points to ensure that the intermediate layers’ computations remain conformal with the model’s final prediction.

Consider a deep neural network (on the left of the figure), the representations output by each layer (in the middle of the figure), and the nearest neighbors found at each layer in the training data (on the right of the figure). Drawings of pandas and school buses indicate training points. Confidence is high when there is homogeneity among the nearest neighbors’ labels. Interpretability of the outcome of each layer is provided by the nearest neighbors. Robustness stems from detecting nonconformal predictions from the nearest neighbor labels found for out-of-distribution inputs across different layers.

Algorithm

The pseudo-code for the Deep k-Nearest Neighbors (DkNN) algorithm, which the authors introduce to ensure that each intermediate layer’s computations remain conformal with the final model’s prediction, is given below:
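
Separately from the authors’ pseudo-code, the main steps can be sketched in NumPy. This is a simplified illustration under several assumptions: it uses brute-force Euclidean nearest-neighbor search, it scores each candidate label by counting the neighbors (summed over layers) whose label differs from it, and it turns that score into a credibility value by comparing it against scores computed on a held-out calibration set. All function and parameter names are hypothetical.

```python
import numpy as np

def knn_labels(train_reps, train_labels, query_rep, k):
    """Labels of the k training points closest to the query at one layer."""
    dists = np.linalg.norm(train_reps - query_rep, axis=1)
    return train_labels[np.argsort(dists)[:k]]

def nonconformity(layer_train_reps, train_labels, layer_query_reps, k, num_classes):
    """For each candidate label j, count neighbors (over all layers) not labeled j."""
    alpha = np.zeros(num_classes)
    for train_reps, query_rep in zip(layer_train_reps, layer_query_reps):
        neighbors = knn_labels(train_reps, train_labels, query_rep, k)
        alpha += k - np.bincount(neighbors, minlength=num_classes)
    return alpha

def dknn_predict(alpha, calibration_alphas):
    """Pick the label best supported by the neighbors and report its credibility.

    calibration_alphas: nonconformity of held-out calibration points with
    respect to their true labels (assumed to be precomputed).
    """
    # Empirical p-value: fraction of calibration scores at least as large.
    p_values = np.array([np.mean(calibration_alphas >= a) for a in alpha])
    prediction = int(np.argmax(p_values))
    return prediction, float(p_values[prediction])

# Toy example with two layers, two classes, and k = 3.
train_labels = np.array([0, 0, 0, 1, 1, 1])
layer_train_reps = [
    np.array([[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]], dtype=float),
    np.array([[0.0], [0.1], [0.2], [1.0], [1.1], [1.2]]),
]
layer_query_reps = [np.array([0.2, 0.2]), np.array([0.05])]
alpha = nonconformity(layer_train_reps, train_labels, layer_query_reps, k=3, num_classes=2)
prediction, credibility = dknn_predict(alpha, np.array([0, 0, 2, 6]))
```

When the neighbors at every layer share one label, the nonconformity for that label is zero and the credibility is high; mixed neighbor labels across layers drive the credibility down.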

Basis for Evaluation

The bases for evaluating confidence, interpretability, and robustness are discussed below.

Evaluation of Confidence/Credibility

In their experiments, the authors measure the credibility that the DkNN assigns to its inputs. They observe that credibility varies across both in- and out-of-distribution samples, and they tailor their evaluation to demonstrate that the credibility is well calibrated. The experiments are performed on both benign and adversarial examples.

Classification Accuracy

The experiments use three datasets: MNIST (handwritten digit recognition), SVHN, and GTSRB. The following figure compares the accuracy of the DNN and DkNN models on the three datasets.

Credibility on in-distribution samples

Reliability diagrams are plotted for the three different datasets (MNIST, SVHN and GTSRB) below:

On the left, they visualize the confidence estimates output by the DNN softmax, calculated as the probability \(\max_{j} f_j(x)\). On the right, they plot the credibility of DkNN predictions. From the graphs, it may appear that the softmax is better calibrated than the corresponding DkNN, because its reliability diagrams are closer to a linear relation between accuracy and DNN confidence. However, once the distribution of the values is considered, it becomes clear that the softmax is almost always very confident on test data, with a confidence above 0.8, whereas the DkNN uses the full range of possible credibility values for datasets like SVHN, whose test set contains a larger number of inputs that are difficult to classify.
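
For readers unfamiliar with reliability diagrams, the quantities they plot can be computed as in the sketch below. The function name, the choice of 10 equal-width bins, and the toy inputs are assumptions; the scores could be either softmax confidences or DkNN credibility values.

```python
import numpy as np

def reliability_bins(scores, correct, num_bins=10):
    """Per-bin accuracy and count for scores in [0, 1].

    scores:  confidence or credibility assigned to each prediction.
    correct: 1 if the corresponding prediction was right, else 0.
    Returns (bin_edges, accuracy_per_bin, count_per_bin); empty bins get NaN.
    """
    scores = np.asarray(scores, dtype=float)
    correct = np.asarray(correct, dtype=float)
    edges = np.linspace(0.0, 1.0, num_bins + 1)
    # Assign each score to a bin using the interior edges only.
    idx = np.digitize(scores, edges[1:-1])
    counts = np.bincount(idx, minlength=num_bins)
    hits = np.bincount(idx, weights=correct, minlength=num_bins)
    accuracy = np.divide(hits, counts,
                         out=np.full(num_bins, np.nan), where=counts > 0)
    return edges, accuracy, counts

edges, accuracy, counts = reliability_bins(
    scores=[0.05, 0.95, 0.92, 0.55],
    correct=[0, 1, 0, 1],
)
```

A well-calibrated score yields per-bin accuracy close to the bin's score range, while the per-bin counts show how much of the score range is actually used.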

Credibility on out-of-distribution samples

For MNIST, the first set of out-of-distribution samples contains images from the NotMNIST dataset, which have the same format as MNIST images but come from non-overlapping classes. For SVHN, the out-of-distribution samples contain images from the CIFAR-10 dataset; again, they have the same format, but there is no overlap between the SVHN and CIFAR-10 classes. For both MNIST and SVHN, they also rotated all the test inputs by an angle of 45 degrees to generate a second set of out-of-distribution samples.

In the above figure, the credibility of the DkNN on the out-of-distribution samples is compared with the DNN softmax on MNIST (left) and SVHN (right). The DkNN algorithm assigns an average credibility of 6% and 9% to inputs from the NotMNIST and rotated MNIST test sets respectively, compared to 33% and 31% for the softmax probabilities. The same holds for the SVHN model: the DkNN assigns an average credibility of 15% and 18% to CIFAR-10 and rotated SVHN inputs, compared to 52% and 33% for the softmax probabilities.

Evaluation of the interpretability

Here, they consider a model that is biased with respect to a person’s skin color. In a recent study, Stock and Cisse demonstrate how an image of former US president Barack Obama throwing an American football in a stadium is classified as a basketball by a ResNet model. The authors reproduce this experiment and apply the DkNN algorithm to the model. They plot the 10 nearest neighbors from the training data, computed on the representation output by the last hidden layer of the ResNet model.

On the left side of the above figure, the test image processed by the DNN is the same as the one used by Stock and Cisse. Its nearest neighbors are 7 black and 3 white basketball players. The ball in these images is similar in color to the football and is also located in the air. The authors hypothesized that the ball plays an important role in the prediction, so they ran another experiment with the same image, this time cropped to remove the ball. The model then predicted the class racket. The neighbors in this training class are white players. The images share certain characteristics: the background is green, and most of the people are wearing white clothes and holding their hands in the air. In this example, besides skin color, the position and appearance of the ball also contributed to the model’s prediction.

Evaluation of Robustness

DkNN is a step towards correctly handling malicious inputs such as adversarial examples because it:

- outputs more reliable confidence estimates on adversarial examples than the softmax.

- provides insights as to why adversarial examples affect undefended DNNs.

- is robust to the adaptive attacks the authors considered.

Accuracy on Adversarial Examples

They crafted adversarial examples using three algorithms: the Fast Gradient Sign Method (FGSM), the Basic Iterative Method (BIM), and the Carlini-Wagner \(\ell_2\) attack (CW).
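
As a reminder of how the first two attacks work, here is a small sketch on a logistic-regression model, chosen only because its input gradient has a closed form. The model, the valid input range [0, 1], and all names are assumptions for illustration; the CW attack requires an optimizer and is omitted.

```python
import numpy as np

def loss_gradient(x, y, w, b):
    """Gradient of the cross-entropy loss of a logistic model w.r.t. the input x."""
    p = 1.0 / (1.0 + np.exp(-(w @ x + b)))
    return (p - y) * w

def fgsm(x, y, w, b, eps):
    """One step of size eps in the direction of the sign of the loss gradient."""
    return np.clip(x + eps * np.sign(loss_gradient(x, y, w, b)), 0.0, 1.0)

def bim(x, y, w, b, eps, step, iters):
    """Repeated small FGSM steps, kept within an eps-ball around the original x."""
    x_adv = x.copy()
    for _ in range(iters):
        x_adv = x_adv + step * np.sign(loss_gradient(x_adv, y, w, b))
        x_adv = np.clip(x_adv, x - eps, x + eps)  # stay close to the original
        x_adv = np.clip(x_adv, 0.0, 1.0)          # stay a valid input
    return x_adv

w, b = np.array([1.0, -1.0]), 0.0
x, y = np.array([0.6, 0.4]), 1       # correctly classified as class 1
x_fgsm = fgsm(x, y, w, b, eps=0.2)
x_bim = bim(x, y, w, b, eps=0.2, step=0.05, iters=10)
```

Both perturbed inputs stay within 0.2 of the original in every coordinate, yet the sign of `w @ x + b` flips, so the toy model's prediction changes.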

All of their test results are shown in the following table, which also includes the accuracy of both the undefended DNN and the DkNN. From the table, they conclude that even though the attacks succeed in evading the undefended DNN, some accuracy on adversarial examples is recovered when the model is integrated with the DkNN.

They also plot reliability diagrams comparing the DkNN credibility on GTSRB adversarial examples with the softmax probabilities output by the DNN. The red line reflects the number of points in each bin. For the DkNN, credibility is low across all attacks: it outputs a credibility below 0.5 for most of the inputs. This is a sharp departure from the softmax probabilities, which assign most adversarial examples to the wrong class with a high confidence of above 0.9 for the FGSM and BIM attacks. They also observe that the BIM attack is more successful than the FGSM or CW attacks at introducing perturbations that mislead the DkNN.

Explanation of DNN Mispredictions

In the above figure, for both clean and adversarial examples, we can observe that the number of candidate labels decreases as we move up the neural network from its input layer to its output layer. The number of nearest neighboring training representations (out of k = 75 in this case) whose label matches the final prediction made by the DNN is smaller for some attacks. For the CW attack, the true label of the adversarial examples it produces is often recovered by the DkNN. Again, the lack of conformity between neighboring training representations at different layers of the model characterizes the weak support for the model’s prediction.

Comparison to LID

We discussed similarities between the DkNN approach and Local Intrinsic Dimensionality [ICLR 2018]. There are important differences between the approaches, but given the results on LID reported in Obfuscated Gradients Give a False Sense of Security: Circumventing Defenses to Adversarial Examples (discussed in Class 3), it is worth investigating how robust DkNN is to the same attacks. (Note that neither of these defenses is really obfuscating gradients, so the attack strategy reported in that paper is simply to use high-confidence adversarial examples.)

The Secret Sharer: Measuring Unintended Neural Network Memorization & Extracting Secrets

Nicholas Carlini, Chang Liu, Jernej Kos, Ulfar Erlingsson, Dawn Song. 2018. The Secret Sharer: Measuring Unintended Neural Network Memorization & Extracting Secrets. arXiv:1802.08232. [PDF]

This paper focuses on an adversary targeting “secret” user information stored in a deep neural network. Sensitive or “secret” user information can be included in the datasets used to train deep machine learning models. For example, if a model is trained on a dataset of emails, some of which contain credit card numbers, there is a high probability that a credit card number can be extracted from the model, according to this paper.

Introduction

Rapid adoption of machine learning techniques has resulted in models trained on sensitive user information or “secrets”. The “secrets” may include “personal messages, location histories, or medical information.” The potential for machine learning models to memorize or store secret information could reveal sensitive user information to an adversary. Even black-box models were found to be susceptible to leaking secret user information. As more deep learning models are deployed, we need to be mindful of the models’ ability to store information, and to shield models from revealing secrets.

Contributions